Emerging Faces - A Collaboration with 3D (aka Robert Del Naja of Massive Attack)

Have been working on and off for the past several months with Bristol-based artist 3D (aka Robert Del Naja, the founder of Massive Attack). We have been experimenting with applying GANs, CNNs, and many of my own artificial intelligence algorithms to his artwork. I have long been working at encapsulating my own artistic process in code. 3D and I are now exploring whether we can capture parts of his artistic process.

It all started simply enough with looking at the patterns behind his images. We started creating mash-ups by using CNNs and Style Transfer to combine the textures and colors of his paintings with one another. It was interesting to see what worked and what didn't and to figure out what about each painting's imagery became dominant as they were combined.

As cool as these looked, we were both left underwhelmed by the symbolic and emotional aspects of the mash-ups. We felt the art needed to be meaningful. All that was really being combined was color and texture, not symbolism or context. So we thought about it some more and 3D came up with the idea of trying to use the CNNs to paint portraits of historical figures who made significant contributions to printmaking. A couple of people came to mind as we bounced ideas back and forth before 3D suggested Martin Luther. At first I thought he was talking about Martin Luther King Jr, which left me confused. But then I realized he was talking about the author of The 95 Theses, and it made more sense. Not sure if 3D realized I was confused, but I think I played it off well and he didn't suspect anything. We tried applying CNNs to Martin Luther's famous portrait and got the following results.

It was nothing all that great, but I made a couple of paintings from it to test things. Also tried to have my robots paint a couple of other new media figures like Mark Zuckerberg.

Things still were not gelling though. Good paintings, but nothing great. Then 3D and I decided to try some different approaches.

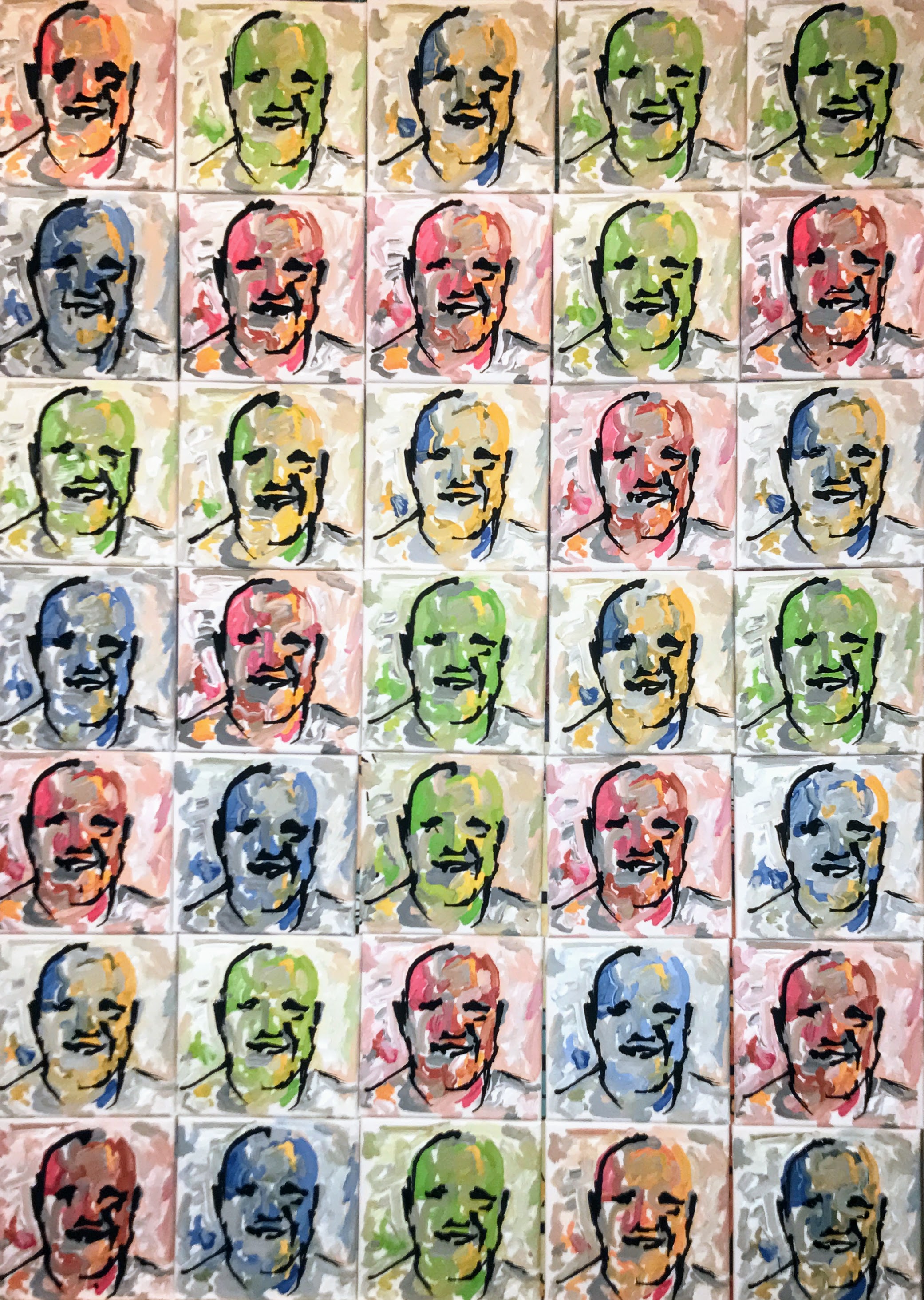

I showed him some GANs where I was working on making my robots imagine faces. Showed him how a really neat part of the GAN occurred right at the beginning when faces emerge from nothing. I also showed him a 5x5 grid of faces that I have come to recognize as a common visualization when implementing GANs in tutorials. We got to talking about how as a polyptych, it recalled a common Warhol trope except that there was something different. Warhol was all about mass produced art and how cool repeated images looked next to one another. But these images were even cooler, because it was a new kind of mass production. They were mass produced imagery made from neural networks where each image was unique.

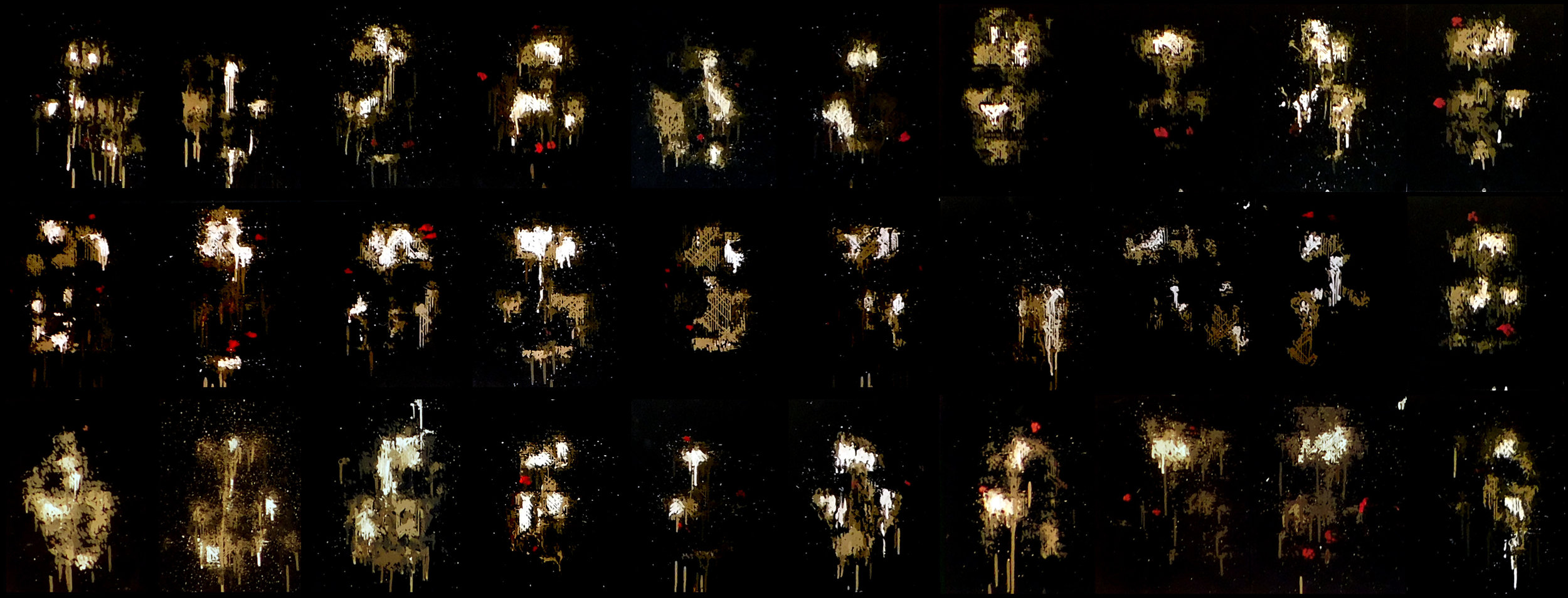

I started having my GANs generate tens of thousands of faces. But I didn't want the faces in too much detail. I liked how they looked before they resolved into clear images. It reminded me of how my own imagination worked when I tried to picture things in my mind. It is foggy and nondescript. From there I tested several of 3D's paintings to see which would best render the imagined faces.

3D's Beirut (Column 2) was the most interesting, so I chose that one and put it and the GANs into the process that I have been developing over the past fifteen years. A simplified outline of the artificially creative process it became can be seen in the graphic below.

My robots would begin by having the GAN imagine faces. Then I ran the Viola-Jones face detection algorithm on the GAN images until it detected a face. At that point, right when the general outlines of faces emerged, I stopped the GAN. Then I applied a CNN Style Transfer on the nondescript faces to render them in the style of 3D's Beirut. Then my robots started painting. The brushstroke geometry was taken out of my historic database that contains the strokes of thousands of paintings, including Picassos, Van Goghs, and my own work. Feedback loops refined the image as the robot tried to paint the faces on 11"x14" canvases. All told, dozens of AI algorithms, multiple deep learning neural networks, and feedback loops at all levels started pumping out face after face after face.
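The stopping rule described above can be sketched in a few lines. This is only a minimal illustration, not the actual CloudPainter code: the function name `first_detected_snapshot`, the snapshot list, and the threshold "detector" are all hypothetical stand-ins. In the real process the detector would be Viola-Jones running on actual GAN images.

```python
def first_detected_snapshot(snapshots, looks_like_face):
    """Return (step, image) for the first snapshot the detector accepts, or None."""
    for step, image in snapshots:
        if looks_like_face(image):
            return step, image
    return None


# Hypothetical stand-ins: each "image" is just a number describing how resolved
# the face is, and the "detector" is a simple threshold on that number.
training_snapshots = [(0, 0.05), (120, 0.20), (200, 0.45), (400, 0.70), (600, 0.85)]

stopping_point = first_detected_snapshot(
    training_snapshots, lambda image: image >= 0.6
)
print(stopping_point)
```

The point of the design is that the GAN is never run to completion. The first snapshot that passes the detector is the one handed on to style transfer, which keeps the faces at the foggy, barely resolved stage.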

Thirty-two original faces later it arrived at the following polyptych which I am calling the First Sparks of Artificial Creativity. The series itself is something I have begun to refer to as Emerging Faces. I have already made an additional eighteen based on a style transfer of my own paintings, and plan to make many more.

Above is the piece in its entirety as well as an animation of it working on an additional face at an installation in Berlin. You can also see a comparison of 3D's Beirut to some of the faces. An interesting aspect of the artwork is that despite how transformative the faces are from the original painting, the artistic DNA of the original is maintained with those seemingly random red highlights.

It has been a fascinating collaboration to date. Looking forward to working with 3D to further develop many of the ideas we have discussed. Though this explanation may appear to express a lot of artificial creativity, it only goes into his art on a very shallow level. We are always talking and wondering about how much deeper we can actually go.

Pindar

Stand Up Comedy at the Aspen AI Conference

Had my most enjoyable talk yet at the Aspen Institute AI Conference in Berlin. I consider it a success because people are finally laughing at all my bad jokes. It has taken me a dozen talks to get the timing down, but I think I finally got it. And let's face it, who really cares about all the AI nonsense I am talking about? All anyone really cares about in these talks are the jokes. You could get up on stage at one of these events, know nothing about AI and Creativity, but if you get everyone laughing, they will all think you had the best talk of the conference.

In addition to my stand up comedy routine, also had my robots continuing to paint these ghost faces. Enjoying the series and looking forward to the next steps.

My Dinner with Cyborg Neil Harbisson

One of the highlights of my recent trip to speak at the MBN Y Forum in Korea was speaking on a panel at the VIP dinner with Cyborg Neil Harbisson. As far as artists go, Harbisson is about as avant-garde as they come. That antenna you see hovering over his head is beyond your typical IoT wearable. It is what Harbisson considers an extrasensory organ that helps him detect colors outside the visible spectrum. And he does more than just wear it well; it is surgically implanted into his skull.

During the dinner I think I asked more questions of him than the rest of the room combined. When flash photography went off, I would ask him if there were any extra colors. I was also curious what the sensation of the infrared and ultraviolet colors felt like. Also asked him if he felt he was missing things when his cyborg appendage was off, to which I learned he could not turn it off any more than we could turn off our own natural senses. He answered all my questions patiently, though I hope I was not too annoying. But he was just too fascinating not to harass. Harbisson was attempting to give his body more senses than he was born with. I had to learn as much as I could from him.

So I am not sure if I consider him a robotic artist in the traditional sense, except that he himself is attempting to become a robot. He has begun championing cyborg rights and is serving as a visionary to help ease what he considers our inevitable evolution into cyborgs. Think about what he is championing and things become very interesting. In his talk he pointed out that never before in history have we had control over our evolution, but now we do. And not just on a mechanical level, but on a biological level as well. The next 20-30 years will be interesting times.

Pindar

Talking Artificial Creativity in Korea

Just returned from an amazing event in Seoul, the MBN Y Forum. Gave a talk where I discussed how over the past 15 years my robots have gone from being over-engineered printers to semi-autonomous creative machines.

The thumbnail for this video of my talk is my favorite photo of the event taken by my wife. I have to say, I really like being featured on a large format screen as I talk about art in front of thousands of people. Here is another stitched together panorama shot of the event.

And finally for the substance, here

More Ghostlike Faces Imagined by my Robots

My series of portraits painted by my robot continues with these 32 ghost images. Calling the series Emerging Faces. Will be discussing how and why my robots are thinking these up and painting them in Korea next week. Will post a more detailed account here shortly. Here are some closer looks at some of my favorite images...

Pindar

Ghosts From the Darkness

Been painting dozens and dozens of portraits of faces imagined by a GAN I implemented. These are haunting. I am fascinated as the faces begin emerging from the algorithm and try to capture them right as they begin to take shape and become recognizable as faces. Here are two recent paintings...

The First Sparks of Artificial Creativity

My robots paint with dozens of AI algorithms all constantly fighting for control. I imagine that our own brains are similar and often think of Minsky's Society of Mind, where he theorizes our brains are not one mind, but many, all working with, for, and against each other. This has always been an interesting concept and model for creativity for me. Much of my art is trying to create this mish mosh of creative capsules all fighting against one another for control of an artificially creative process.

Some of my robots' creative capsules are traditional AI. They use k-means clustering for palette reduction, Viola-Jones for face detection, and Hough lines to help plan stroke paths, among many others. On top of that there are some algorithms that I have written myself to do things like try to measure beauty and create unique compositions. But the really interesting stuff that I am working with uses neural networks. And the more I use neural networks, the more I see parallels between how these artificial neurons generate images and how my own imagination does.
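To make the palette reduction idea concrete, here is a minimal sketch of k-means clustering over RGB colors using only the Python standard library. It is an illustration under assumptions, not the code my robots run: the function names, the evenly spaced starting centers, and the four sample pixels are all made up for the example.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two RGB tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_palette(pixels, k, iterations=10):
    """Cluster RGB pixels into k palette colors with plain k-means."""
    if not 1 <= k <= len(pixels):
        raise ValueError("k must be between 1 and the number of pixels")
    # Deterministic start (an assumption): take k pixels spread through the list.
    step = len(pixels) // k
    centers = [tuple(float(c) for c in pixels[i * step]) for i in range(k)]
    for _ in range(iterations):
        # Assignment step: each pixel joins its nearest center.
        clusters = [[] for _ in range(k)]
        for p in pixels:
            nearest = min(range(k), key=lambda i: squared_distance(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: each center moves to the mean of its members.
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous center
                centers[i] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return centers


def reduce_to_palette(pixels, palette):
    """Replace every pixel with the closest palette color."""
    return [min(palette, key=lambda c: squared_distance(p, c)) for p in pixels]


# Made-up sample: two reddish pixels and two bluish pixels.
sample_pixels = [(250, 10, 10), (240, 20, 20), (10, 10, 250), (20, 20, 240)]
palette = kmeans_palette(sample_pixels, k=2)
print(palette)
print(reduce_to_palette(sample_pixels, palette))
```

A painting robot has a limited number of paint wells, so collapsing thousands of pixel colors into a handful of representative ones is a natural first step before any stroke is planned.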

Recently I have seen an interesting similarity between how a specific type of neural network called a Generative Adversarial Network (GAN) imagines unique faces compared to how my own mind does. Working and experimenting with it, I am coming closer and closer to thinking that this algorithm might just be a part of the initial phases of imagination, the first sparks of creativity. Full disclosure before I go on, I say this as an artist exploring artificial creativity. So please regard any parallels I find as an artist's take on the subject. What exactly is happening in our minds would fall under the expertise of a neuroscientist and modeling what is happening falls in the realm of computational neuroscience, both of which I dabble in, though I am by no means an expert in either.

Now that I have made clear my level of expertise (or lack thereof), there is actually an interesting thought experiment that I have come up with that helps illustrate the similarities I am seeing between how we imagine faces compared to how GANs do. For this thought experiment I am going to ask you to imagine a familiar face, then I am going to ask you to get creative and imagine an unfamiliar face. I will then show you how GANs "imagine" faces. You will then be able to compare what went on in your own head with what went on in the artificial neural network and decide for yourself if there are any similarities.

Simple Mental Task - Imagine a Face

So the first simple mental task is to imagine the face of a loved one. Clear your mind and imagine a blank black space. Now pull an image of your loved one out of the darkness until you can imagine a picture of them in your mind's eye. Take a mental snapshot.

Creative Mental Task - Imagine an Unfamiliar Face

The second task is to do the exact same thing, but by imagining someone you have never seen before. This is the creative twist. I want you to try to imagine a face you have never seen. Once again begin by clearing your mind until there is nothing. Then out of the darkness try to pull up an image of someone you have never seen before. Take a second mental snapshot.

This may have seemed harder, but we do it all the time when we do things like imagine what the characters of a novel might look like, or when we imagine the face of someone we talk to on the phone, but have yet to meet. We are somehow generating these images in our mind, though it is not clear how because it happens so fast.

How Neural Nets Imagine Unfamiliar Faces

So now that you have tried to imagine an unfamiliar face, it is neat to see how neural networks try to do this. One of the most interesting methods involves the GANs I have been telling you about. GANs are actually two neural nets competing against one another, in this case to create images of unique faces from nothing. But before I can explain how two neural nets can imagine a face, I probably have to give a quick primer on what exactly a neural net is.

The simplest way to think about an artificial neural network is to compare it to our brain activity. The following images show actual footage of live neuronal activity in our brain (left) compared to numbers cascading through an artificial neural network (right).

Live Neuronal Activity - courtesy of Michelle Kuykendal & Gareth Guvanasen

Artificial Neural Network

Our brains are a collection of tens of billions of neurons with trillions of synapses. The firing of the neurons seen in the image on the left and the cascading of electrical impulses between them is basically responsible for everything we experience, every pattern we notice, and every prediction our brain makes.

The small artificial neural network shown on the right is a mathematical model of this brain activity. To be clear it is not a model of all brain activity, that is computational neuroscience and much more complex, but it is a simple model of at least one type of brain activity. This artificial neural network in particular is a small collection of 50 artificial neurons with 268 artificial synapses where each artificial neuron is a mathematical function and each artificial synapse is a weighted value. These neural nets simulate neuronal activity by sending numbers through the matrix of connections converting one set of numbers to another. These numbers cascade through the artificial neural net similarly to how electrical impulses cascade through our minds. In the animation on the right, instead of showing the numbers cascading, I have shown the nodes and edges lighting up and when the numbers are represented like this, one can see the similarities between live neuronal activity and artificial neural networks.
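For anyone who wants to see the numbers cascade, here is a minimal sketch of that idea in Python. It assumes each artificial neuron is a weighted sum passed through a sigmoid function, which is one common choice and not necessarily what the network in the animation uses. The layer sizes and weight values are made up for illustration.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, layers):
    """Cascade numbers through the network one layer at a time.

    layers is a list of (weights, biases) pairs, where weights[j][i] is the
    artificial synapse connecting input i to neuron j of that layer.
    """
    activations = list(inputs)
    for weights, biases in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


# A made-up network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
tiny_network = [
    ([[0.5, -0.2], [0.1, 0.8], [-0.7, 0.3]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]
print(forward([0.9, 0.4], tiny_network))
```

Each pass through the loop converts one set of numbers into another, which is all the cascading in the animation amounts to.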

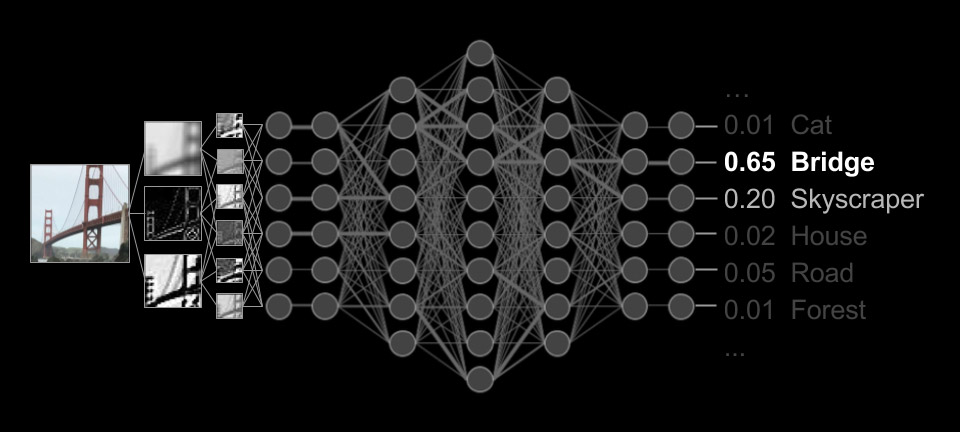

While it may seem abstract to think how this could work, the following graphic shows one of its popular applications. In this convolutional neural network an image is converted into pixel values, these numbers then enter the artificial neural network on one side, go through a lot of linear algebra, and eventually come out the other side as a classification. In this example, an image of a bridge is identified as a bridge with 65% certainty.
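A certainty figure like that usually comes from the very last step of the network, where raw scores are turned into percentages. The sketch below assumes that step is a softmax function, which is a common choice for classifiers but is my assumption about the network in the graphic. The labels and scores are made up.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the peak keeps exp() from overflowing
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical labels and raw scores from the last layer of a classifier.
labels = ["bridge", "pier", "dam"]
raw_scores = [2.1, 1.0, 0.3]

probabilities = softmax(raw_scores)
best = max(range(len(labels)), key=lambda i: probabilities[i])
print(labels[best], probabilities[best])
```

The label with the highest score always wins, but how far ahead it is determines how certain the network claims to be.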

With this quick neural network primer, it is now interesting to go into more details of a face creating Generative Adversarial Network, which is two opposing neural nets. When these neural nets are configured just right, they can be pretty creative. Furthermore, closely examining how they work, I can't help but wonder if some structure similar to them is in our minds at the very beginning of when we try to imagine unfamiliar faces.

So here is how these adversarial neural nets fight against each other to generate faces from nothing.

The first of the two neural nets is called a Discriminator and it has been shown thousands of faces and understands the patterns found in typical faces. This neural net would master the first simple mental task I gave you. Same as how you could pull the face of a loved one into your imagination, this neural net knows what thousands of faces look like. Perhaps more importantly, however, when shown a new image, it can tell you whether or not that image is a face. This is the Discriminator's most important task in a GAN. It can discriminate between images of faces and images that are not faces, and also give some hints as to why it made that determination.

The second neural net in a GAN is called a Generator. And while the Discriminator knows what thousands of faces look like, this Generator is dumb as a bag of hammers. It doesn't know anything. It begins as a matrix of completely random numbers.

So here they are at the very beginning of the process ready to start imagining faces.

First thing that happens is the Generator guesses at what a face looks like and asks the Discriminator if it thinks the image is a face or not. But remember the Generator is completely naive and filled with random weights, so it begins by creating an image of random junk.

When determining whether or not this is a face, the Discriminator is obviously not fooled. The image looks nothing like a face. So it tells the Generator that the image looks nothing like a face, but at the same time gives some hints about how to make its next attempt a little more facelike. This is one of the really important steps. Beyond just telling the Generator that it failed to make a face, the Discriminator is also telling it what parts of the image worked, and the Generator is taking this input and changing itself before making the next attempt.
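The "hints" are gradients. Here is a minimal sketch of a single round of that exchange, shrunk down so that the "image" is one number, the Generator has two weights (`a` and `b`), and the Discriminator is a fixed two-weight scorer (`w` and `c`). All of these names and values are hypothetical, and in a real GAN the Discriminator keeps learning too. This sketch only shows the Generator's side.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def discriminator(x, w, c):
    """Score in (0, 1): how real the single number x looks."""
    return sigmoid(w * x + c)


def generator(z, a, b):
    """Turn random input z into a generated value."""
    return a * z + b


def generator_step(z, a, b, w, c, learning_rate=0.1):
    """One attempt, one rejection, one adjustment of the Generator's weights."""
    x = generator(z, a, b)
    score = discriminator(x, w, c)
    # The hint: gradient of log(score) with respect to the generated value x.
    hint = (1.0 - score) * w
    # Chain rule: x = a*z + b, so dx/da = z and dx/db = 1.
    return a + learning_rate * hint * z, b + learning_rate * hint


# Made-up starting point: the Discriminator prefers larger values of x.
a, b = 0.5, 0.0
w, c = 1.0, -3.0
z = 1.0

for attempt in range(5):
    print(attempt, discriminator(generator(z, a, b), w, c))
    a, b = generator_step(z, a, b, w, c)
```

Each pass nudges the Generator's weights in whatever direction the Discriminator says would have made the last attempt more convincing, so the printed score creeps upward with every attempt.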

The Generator adjusts the weights of its neural network and 120 tries, rejections, and hints from the Discriminator later, it is still just static, but better static...

But then at attempt 200, ghost images start to emerge out of the darkness...

and with each guess by the Generator, the images get more and more facelike...

at attempt 400,

600,

1500,

and 4000.

After 4,000 attempts, rejections, and corrections from the Discriminator, the Generator actually gets pretty good at making some pretty convincing faces. Here is an animation from the very first attempt to the 4,000th iteration in 10 seconds. Keep in mind that the Generator has never seen or been shown a face.

So How Does this Compare to How We Imagined Faces?

Earlier we did the thought experiment and I told you that there would be similarities between how this GAN imagined faces and how you did. Well hopefully the above animation is not how you imagined an unfamiliar face. If it was, well, you are probably a robot. Humans don't think like this, at least I don't.

But let's slow things down and look at what happened with the early guessing (between the Generator's 180th and 400th attempts).

This animation starts with darkness as nondescript faces slowly bubble out of nothing. They merge into one another, never taking on a full identity.

I am not saying that this was the entirety of my creative process. Nor am I saying this is how the human brain generates images, though I am curious what a neuroscientist would think about this. But when I tried to imagine an unfamiliar face, I cleared my mind and an image appeared from nothing. Even though it happens fast and I can not figure out the mechanisms doing it, it has to start forming from something. This leads me to wonder if a GAN or some similar structure in my mind began by comparing random thoughts in one part of my mind to my memory of how all my friends look in another part. I wonder if from this comparison my brain was able to bring an image out of nothing and into vague blurry fog, just like in this animation.

I think this is the third time that I am making the disclaimer that I am not a neuroscientist and do not know what exactly is happening in my mind. I wonder if any neuroscientist does actually. But I do know that our brains, like my painting robots, have many different ways of performing tasks and being creative. GANs are by no means the only way, or even the most important part of artificial creativity, but looking at it as an artist, it is a convincing model for how imagination might be getting its first sparks of inspiration. This model applies to all manner of creative tasks beyond painting. It might be how we first start imagining a new tune, or even come up with a new poem. We start with base knowledge, try to come up with random creative thoughts, compare those to our base knowledge, and adjust as needed over and over again. Isn't this creativity? And if this is creativity, GANs are an interesting model of the very first steps.

I will leave you here with a series of GAN inspired paintings where my robots have painted the ghostlike faces just as they were emerging from the darkness...

Emerging Faces, 110"x42", Acrylic on Canvas, Pindar Van Arman w/ CloudPainter

Pindar

Converting Art to Data

There is something gross about breaking a masterpiece down into statistics, but there is also something profoundly beautiful about it.

Reproduced Cezanne's Houses at L'Estaque with one of my painting robots using a combination of AI and personal collaboration. One of the neat things about using the robot in these recreations is that it saves each and every brush stroke. I can then go back and analyze the statistics behind the recreation. Here are some quick visualizations...
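As a rough idea of what analyzing saved strokes can look like, here is a minimal sketch in Python. The record format (a `color` name and a `length_mm` value per stroke) and the three sample strokes are hypothetical. They are not the robot's actual log format or real data from the painting.

```python
from collections import Counter
from statistics import mean


def summarize_strokes(strokes):
    """Basic statistics over a non-empty list of saved brush strokes."""
    lengths = [s["length_mm"] for s in strokes]
    return {
        "count": len(strokes),
        "mean_length_mm": mean(lengths),
        "longest_mm": max(lengths),
        "strokes_per_color": Counter(s["color"] for s in strokes),
    }


# Made-up strokes, only to show the shape of the data.
example_strokes = [
    {"color": "ochre", "length_mm": 10.0},
    {"color": "ochre", "length_mm": 20.0},
    {"color": "blue", "length_mm": 30.0},
]
summary = summarize_strokes(example_strokes)
print(summary)
```

Once every stroke is a record like this, questions such as which color dominated or how stroke length changed over the course of the painting become simple queries.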

It is weird to think of something as emotional as art, as data. But the more I work with combining the arts with artificial intelligence, the more I am beginning to think that everything is data.

Below is the finished painting and an animation of each brush stroke.

Simon Colton and The Painting Fool

The Painting Fool - Mood: reflective. Desired quality: simple.

Avoiding Uncreative Behavior

Was excited to recently make contact with Simon Colton, the artist and developer behind The Painting Fool. After a brief Twitter chat with him, heard about his thoughts on the criteria that made things "uncreative". If I understood him correctly, it is not so much that he is trying to make software creative, but that he is trying to avoid things that could be thought of as "uncreative", such as random number generation.

He directed me to one of his articles that went into details on this. What I read was really interesting.

The work begins with a rather elegant definition of Computational Creativity that I agree with.

Computational Creativity: The philosophy, science and engineering of computational systems which, by taking on particular responsibilities, exhibit behaviours that unbiased observers would deem to be creative.

There are many interesting thoughts throughout the rest of the paper, but the two concepts that I found most relevant were that

1) artificially creative systems should avoid randomness

and

2) they should attempt to frame, or give context to, what they are creating.

He begins by criticizing the overreliance on random number generation in computationally creative systems. Using the example of poetry, Colton writes that software could use random number generation to create a poem with "exactly the same letters in exactly the same order as one penned by a person." But despite the fact that both works are identical and read identically, the poem created with random numbers is meaningless by comparison to the poem written by a person.

Why?

Well there are lots of reasons, but Colton elaborates on this to emphasize the importance of framing the artwork, where

"Framing is a term borrowed from the visual arts, referring not just to the physical framing of a picture to best present it, but also giving the piece a title, writing wall text, penning essays and generally discussing the piece in a context designed to increase its value."

In more detail he goes on to say...

"We advocate a development path that should be followed when building creative software: (i) the software is given the ability to provide additional, meta-level, information about its process and output, e.g., giving a painting or poem a title (ii) the software is given the ability to write commentaries about its process and its products (iii) the software is given the ability to write stories – which may involve fictions – about its processes and products, and (iv) the software is given the ability to engage in dialogues with people about what it has produced, how and why. This mirrors, to some extent, Turing’s original proposal for an intelligence test."

This view is really interesting to me.

In my own attempts at artificial creativity, I have always tried to follow both of these ideas. I avoid relying on random number generation to achieve unexpected results. And even though this has long been my instinct, I have never been able to articulate the reason why as well as Colton does in this writing. Given the importance of a creative agent to provide a frame for why and how each creative decision was made, random number generation is a meaningless reason to do something, which in effect takes away from the meaning of a creation.

Imagine being struck by the emotional quality of a color palette in an artwork, then asking the artist why they chose that particular color palette. If the artist's response was, "It was simple really. I just rolled a bunch of dice and let them decide on which color I painted next," the emotional quality of the color palette would evaporate, leaving us feeling empty and cheated that we were emotionally moved by randomly generated noise.

With this reading in mind and the many works of Simon Colton and The Painting Fool, I will continue to try and be as transparent with the decision making process of my creative robots as possible. Furthermore, while I do try to visually frame their decision making processes with timelapses of each painting from start to finish, I am now going to look at ways to verbally frame them. Will be challenging, but it is probably needed.

If you want to see more of Simon Colton and The Painting Fool's work check out the You Can’t Know my Mind exhibition from 2013.

Can robots be creative? They Probably Already Are...

In this video I demonstrate many of the algorithms and approaches I have programmed into my painting robots in an attempt to give them creative autonomy. I hope to demonstrate that it is no longer a question of whether machines can be creative, but only a debate of whether their creations can be considered art.

So can robots make art?

Probably not.

Can robots be creative?

Probably, and in the artistic discipline of portraiture, they are already very close to human parity.

Pindar Van Arman

Are My Robots Finally Creative?

After twelve years of trying to teach my robots to be more and more creative, I think I have reached a milestone. While I remain the artist of course, my robots no longer need any input from me to create unique original portraits.

I will be releasing a short video with details shortly, but as can be seen in the slide above from a recent presentation, my robots can "imagine" faces with GANs, "imagine" a style with CANs, then paint the imagined face in the imagined style using CNNs, all the while evaluating their own work and progress with Feedback Loops. Furthermore, the Feedback Loops can use more CNNs to understand context from the robots' historic database as well as find images in their own work and adjust the painting on both a micro and macro level.
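The feedback loop idea can be sketched very simply: look at the canvas, find where it differs most from the goal, add a stroke there, and look again. The following is a toy illustration only. The canvas is a short list of brightness values, and the `coverage` parameter (how much of the error a single stroke fixes) is a made-up stand-in for the imperfection of real paint. None of this is the actual CloudPainter code.

```python
def paint_with_feedback(target, canvas, coverage=0.5, tolerance=0.05, max_strokes=1000):
    """Repeatedly paint the worst region until the canvas is close to the target."""
    canvas = list(canvas)
    strokes = 0
    if not target:
        return canvas, strokes
    while strokes < max_strokes:
        # Observe: compare every region of the canvas to the target.
        errors = [t - c for t, c in zip(target, canvas)]
        worst = max(range(len(errors)), key=lambda i: abs(errors[i]))
        if abs(errors[worst]) <= tolerance:
            break  # close enough everywhere, stop painting
        # Act: one stroke only covers part of the remaining error.
        canvas[worst] += coverage * errors[worst]
        strokes += 1
    return canvas, strokes


# Made-up three-region "painting" starting from a blank canvas.
goal = [1.0, 0.0, 0.5]
finished, stroke_count = paint_with_feedback(goal, [0.0, 0.0, 0.0])
print(finished, stroke_count)
```

Because the loop re-checks the canvas after every stroke, it never needs to know in advance how well any single stroke will land. It just keeps correcting.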

This process is so similar to how I paint portraiture, that I am beginning to question if there is any real difference between human and computational creativity. Is it art? No. But it is creative.

Artobotics - Robotics Portraits

While computational creativity and deep learning have become a focus of many of my robotics paintings, sometimes I just like to make something I am calling artobotic paintings, or artobotics.

With these paintings I have one of my robots paint relatively quick portraits, but not just one, dozens of them. The following is a large scale portrait of a family that was painted by one of my robots over the course of a week.

HBO Vice Piece on CloudPainter - The da Vinci Coder

Typically the puns applied to artistic robots make me cringe, but I actually liked HBO Vice's name for their segment on CloudPainter. They called me The Da Vinci Coder.

Spent the day with them a couple of weeks ago and really enjoyed their treatment of what I am trying to do with my art. Not sure how you can access HBO Vice without HBO, but if you can it is a good description of where the state of the art is with artificial creativity. If you can't, here are some stills from the episode and a brief description...

Hunter and I working on setting up a painting...

One of my robots working on a portrait...

Elle asking some questions...

Cool shot of my paint covered hands...

One of my robots working on a portrait of Elle...

... and me walking Elle through some of the many algorithms, both borrowed and invented, that I use to get from a photograph of her to a finished stylized portrait below.

Robot Art 2017 - Top Technical Contributor

CloudPainter used deep learning, various open source AI, and some of our own custom algorithms to create 12 paintings for the 2017 Robot Art Contest. The robot and its software were awarded the Top Technical Contribution Award while the artwork it produced received 3rd place in the aesthetic competition. You can see the other winners and competitors at www.robotart.org.

Below are some of the portraits we submitted.

Portrait of Hank

Portrait of Corinne

Portrait of Hunter

We chose to go an abstract route in this year's competition by concentrating on computational abstraction. But not random abstraction. Each image began with a photoshoot, where CloudPainter's algorithms would then pick a favorite photo, create a balanced composition from it, and use Deep Learning to apply purposeful abstraction. The abstraction was not random but based on an attempt to learn from the abstraction of existing pieces of art whether it was from a famous piece, or from a painting by one of my children.

Full description of all the individual steps can be seen in the following video.

NVIDIA GTC 2017 Features CloudPainter's Deep Learning Portrait Algorithms

CloudPainter was recently featured in NVIDIA's GTC 2017 Keynote. As deep learning finds its way into more and more applications, this video highlights some of the more interesting applications. Our ten seconds comes around 100 seconds in, but I suggest watching the whole thing to see where the current state of the art in artificial intelligence stands.

Hello7Bot - A Tutorial to get 7Bot Moving

A couple of people have asked how I got my 7Bots running. Writing this tutorial to demonstrate how I got their example code working. Will also be giving all the code I used to run my 7Bots in the Robot Art 2017 Contest. Hopefully this tutorial is helpful, but if it isn't, email me with any issues and I will try and respond as quickly as possible, maybe even immediately.

So here is a quick list of steps in this tutorial:

1: Get your favorite PC or Mac.

2: Plug 7Bot into your computer.

3: Make sure the Arduino Due drivers are installed (optional).

4: Install Processing3 to run code.

5: Download 7Bot example code.

6: Open 7Bot example code in Processing3.

7: Make minor configuration adjustments.

8: Run the example code in Processing3.

9: Watch 7Bot come to life.

10: Experiment with my 7Bot code.

In more detail:

Step 1: Go on your favorite PC (Windows or Mac, maybe Linux?)

Simple Enough. Pretty sure it will also work on Linux, but I haven't tried it.

Step 2: Plug 7Bot into Computer and Electrical Socket

Use USB cable to plug 7Bot into your computer.

Also make sure 7Bot is plugged into external power source.

To see if it has power, hit the far left of the three buttons on the back of the robot. It should go to the default ready position as seen below.

Step 3: (OPTIONAL) If 7Bot Is Acting Weird, Make Sure Drivers for the Arduino Due Are Installed

This step may or may not be needed, and I am currently working to get to the bottom of why this tutorial works for some 7Bots and not others. My 7Bots came with drivers for the Arduino Due pre-installed, though I have heard of 7Bots where it sounds like they were not. If you think your 7Bot has already been set up, you can proceed. If not, try one of the two following strategies to figure out if 7Bot is ready to go.

1: Download the GUI at http://www.7bot.cc/download and try using it to get your 7Bot moving.

2: Download Getting_Started_with_Bot_v1.0.pdf and make sure Arduino Due drivers are installed from its instructions.

Step 4: Download Processing3

Processing3 is a Java-based development environment that is pretty straightforward if you are familiar with any of the major languages.

Download it here and install it.

Step 5: Download 7Bot Example Code

Go to github and clone their example code from the following repo.

If you are unfamiliar with github or just want the zip file, here take this.

Step 6: Open Example Code in Processing3

Go to the Arm7Bot_Com_test.pde example file you got earlier and open it.

It will ask you how to open the code, so specify the location of wherever you put the processing.exe file.

When it opens up in Processing3 it will look something like this.

Step 7: Make Minor Configuration Adjustments.

Early in the code you will find the following lines

// Open Serial Port: Your should change PORT_ID accoading to

// your own situation.

// Please refer to: https://www.processing.org/reference/libraries/serial/Serial.html

int PORT_ID = 3;

Change the PORT_ID to match the one you plugged 7Bot into. You can refer to the documentation shown, use trial and error, or find yours by adding the following line of code and running the program...

// List all the available serial ports:

printArray(Serial.list());

When I ran this, I saw the following...

[0] "/dev/cu.Bluetooth-Incoming-Port"

[1] "/dev/cu.Bluetooth-Modem"

[2] "/dev/cu.usbmodem1411"

[3] "/dev/tty.Bluetooth-Incoming-Port"

[4] "/dev/tty.Bluetooth-Modem"

[5] "/dev/tty.usbmodem1411"

So I set PORT_ID to the first USB port in that list, 2.

int PORT_ID = 2; //PINDAR - CHANGED FROM int PORT_ID = 3;
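If you would rather not count list entries by hand, the lookup can be automated. The following is only a minimal sketch in plain Java, not part of the 7Bot example code: the class name PortPicker, the method firstPortContaining, and the "usbmodem" search fragment are all my own assumptions, based on how the port happened to be named on my Mac. On Windows the ports are typically named like COM3, so you would need a different fragment there.

```java
import java.util.Locale;
import java.util.OptionalInt;

/** Hypothetical helper: choose a serial port index by name instead of hard-coding it. */
final class PortPicker {

    /**
     * Returns the index of the first port whose name contains the given
     * fragment (case-insensitive), or an empty result if none matches.
     */
    static OptionalInt firstPortContaining(String[] portNames, String fragment) {
        String needle = fragment.toLowerCase(Locale.ROOT);
        for (int i = 0; i < portNames.length; i++) {
            if (portNames[i].toLowerCase(Locale.ROOT).contains(needle)) {
                return OptionalInt.of(i);
            }
        }
        return OptionalInt.empty();
    }

    public static void main(String[] args) {
        // Port names copied from the listing printed on my machine.
        String[] ports = {
            "/dev/cu.Bluetooth-Incoming-Port",
            "/dev/cu.Bluetooth-Modem",
            "/dev/cu.usbmodem1411",
            "/dev/tty.Bluetooth-Incoming-Port",
            "/dev/tty.Bluetooth-Modem",
            "/dev/tty.usbmodem1411"
        };
        // "usbmodem" is an assumed fragment; adjust it for your own system.
        OptionalInt id = firstPortContaining(ports, "usbmodem");
        System.out.println(id.isPresent()
                ? "PORT_ID = " + id.getAsInt()
                : "No matching port found");
    }
}
```

With the port names from my machine this prints PORT_ID = 2, which matches the value I picked by hand. Inside a Processing sketch you would pass Serial.list() in place of the hard-coded array.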

Step 8: Run the Code in Processing

Hit the Play Button in the top left hand corner of Processing3, and a small window should open and the robot should start moving.

Step 9: Watch 7Bot come to life.

Or not. Well, it should start moving, but if it doesn't, the first thing to try changing is the PORT_ID from Step 7. If that doesn't work, contact me and I will try and work with you to get it running. Then I will update this blog so that the next person doesn't have the same problem you had.

Step 10: Have fun with my Robot Art 2017 Code

The example code from 7Bot is well documented and shows you how to do all sorts of cool things like recording the robot's movements and then playing them back. I learned most of the code I used in the Robot Art 2017 contest by reverse engineering this example.

If you want to see my code, here is a version that has some extra functionality, such as easier-to-use inverse kinematics. But if you clone this repo, no judging my coding style. I like comments and leaving lots of them in as a history of what I was doing earlier in the process. Never know when I might need to reference them or revert. I know that is what version control is for, but I leave comments everywhere anyways. Hey. You promised you wouldn't judge!

Hope this tutorial works out for you. As mentioned earlier, write with any issues and I will try to clarify within 24 hours, maybe even immediately. 7Bot is awesome and I hope this tutorial helps you get it running.

Pindar

Elastic{ON} 17

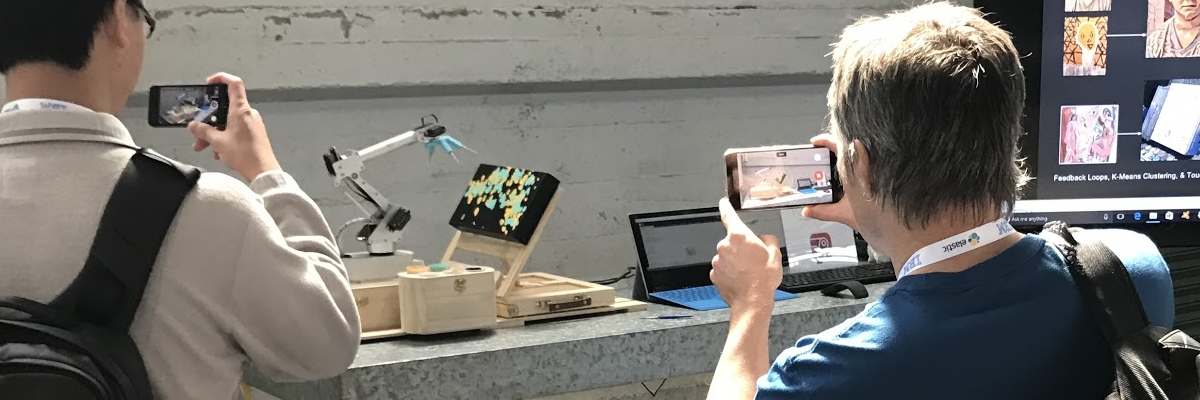

Just finished with a busy week at Elastic{ON} 17 where we had a great demo of our latest painting robot. One of the best things to come of these exhibitions is the interaction with the audience. We can get a better sense of what works as part of the exhibit as well as what doesn't.

Our whole exhibition had two parts. The first was a live interactive demo where one of our robots was tracking a live elastic index of conference attendees' wireless connections and painting them in real time. The second was an exhibition of the cloudpainter project where Hunter and I are trying to teach robots to be creative.

A wall was set up at the conference where we hung 30 canvases. Every 20-30 minutes, a 7Bot robotic arm painted dots on a black canvas. The locations of the dots were taken from the geolocation of 37 wireless access points within the building.
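For anyone curious how a position in a building can become a dot on a canvas, here is one way it could be done. This is a minimal sketch under my own assumptions, not the code that ran at the conference: the class DotMapper, its method names, the floor-plan size in meters, and the canvas size in centimeters are all hypothetical. It simply rescales each coordinate linearly from the floor plan onto the canvas.

```java
/** Hypothetical sketch: place a dot on a canvas from a position on a floor plan. */
final class DotMapper {

    /** Linearly rescales value from the range [srcMin, srcMax] to [dstMin, dstMax]. */
    static double rescale(double value, double srcMin, double srcMax,
                          double dstMin, double dstMax) {
        return dstMin + (value - srcMin) * (dstMax - dstMin) / (srcMax - srcMin);
    }

    /**
     * Maps a floor-plan position to a canvas position. Both coordinate
     * systems are assumed to start at (0, 0) in the same corner.
     */
    static double[] toCanvas(double x, double y,
                             double floorWidth, double floorHeight,
                             double canvasWidth, double canvasHeight) {
        return new double[] {
            rescale(x, 0, floorWidth, 0, canvasWidth),
            rescale(y, 0, floorHeight, 0, canvasHeight)
        };
    }

    public static void main(String[] args) {
        // Made-up numbers: an access point at (50 m, 10 m) on a 100 m x 40 m
        // floor, painted on a 40 cm x 30 cm canvas.
        double[] dot = toCanvas(50.0, 10.0, 100.0, 40.0, 40.0, 30.0);
        System.out.println("Dot at x=" + dot[0] + " cm, y=" + dot[1] + " cm");
    }
}
```

With these made-up numbers the dot lands at x=20.0 cm, y=7.5 cm. In a Processing sketch the built-in map() function does the same rescaling as the rescale method here.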

There are lots of ways to measure the success of an exhibit like this. The main reason we think that it got across to people, though, was the sheer number of pictures and social media posts it generated. There was a constant stream of interested attendees and questions.

Also, the exhibition's sponsors and conference organizers appeared to be pleased with the final results as well as all the attention the project was getting. At the end of two days, approximately 6,000 dots had been painted on the 30 canvases.

Personal highlight for me was the fact that Hunter was able to join me in San Francisco. We had lots of fun at the conference and were super excited to be brought on stage during the conference's closing Q&A with the Elastic Founders.

Will leave you with a pic of Hunter signing canvases for some of our elastic colleagues.

cloudpainter AI described in a Single Graphic

I always struggle to describe all the AI behind cloudpainter. It is all over the place. The following graphic that I put together for my Elastic{ON} 17 Demo sums it up pretty well. While it doesn't include all the algorithms, it features many of my favorite ones.

TensorFlow Dev Summit 2017 cont...

Matt and I had a long day listening to some of the latest breakthroughs in deep learning, specifically those related to TensorFlow. Some standouts included a Stanford student who had created a neural net to detect skin cancer. Also liked Doug Eck's talk about artificial creativity. Jeff Dean had a cool keynote, and I got to learn about TensorBoard from Dandelion Mane. One of my favorite parts of the summit was getting shout-outs and later talking to both Jeff Dean and Doug Eck. The shout-outs to cloudpainter during Jeff's keynote and Eck's session, along with lots of pics, can be seen below. This is mostly for my memories.

cloudpainter at Elastic{ON} 17

Less than half an hour ago I wrote about how I am on my way to the first annual TensorFlow Dev Summit at Google HQ. There is more. While in Mountain View I will also be stopping by elastic HQ to discuss an upcoming booth that cloudpainter has been invited to have at Elastic{ON} 2017.

For the booth I have prepped 5 recreations of masterpieces as well as a new portrait of Hunter based on many of my traditional AI applications. Cool thing about this data set is that I have systematically recorded every brush stroke that has gone into the masterpieces and stored them in an elasticsearch database.

Why? I don't know. Everything is data - even art. And I am trying to reverse engineer the genius of artists such as da Vinci, Van Gogh, Monet, Munch, and Picasso. I have no idea what it will tell us about their art work, or how it will help us decipher the artistry. I am just putting the data out there for the data science community to help me figure it out. The datasets of each and every stroke will be revealed during Elastic{ON} on March 7th. Until then here is a sneak peek at the paintings my robots made.