Channelling Picasso and Braque

My latest robotic painting commission (seen above) began with a question and a challenge.

I was sent a couple of portraits and several cubist works and asked

“whether style transfer works better or whether there are other ways of training the algorithm to repaint a picture analytically in a similar way that Picasso and Braque did when they started (i.e. dissecting a picture into basic geometrical shapes, getting more abstract with every round, etc.).”

It seemed obvious to me that the analytical approach would be better, if only because that was what the original artists had done. But I wasn’t sure, and experimenting with this question sounded like a wonderful idea, so I dived into the portrait. I began by using style transfer to make a grid of portraits as reimagined by neural networks.

The results were as expected, but I am always amazed by how well cubist work lends itself to style transfer. The process works for some art styles and fails completely on others, and I have found it particularly well suited to cubism.

With several style transfers completed, I began experimenting with a more analytical approach. My first step was to use Hough lines to try to find lines, and therefore shapes, in both the original portraits and the ones imagined by the neural networks.

The image above shows many of the attempts to find and define line patterns in both the original portraits and the cubist style transfers. I was expecting better results, but the information was at least useful…

It showed me which of the original six cubist style transfers had the most defined shapes and lines (right). It also gave my robots a frame of reference for how to paint their strokes when the time would come.
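For readers unfamiliar with Hough lines, here is a minimal sketch of the voting idea behind them. It is not the code my robots run; the function name `hough_lines`, the one-degree angle step, and the toy edge points are assumptions made purely for illustration. Each edge pixel votes for every line (rho, theta) that could pass through it, and lines that collect many votes are kept.

```python
import math

def hough_lines(edge_points, threshold):
    """Return (rho, theta_deg, votes) for lines supported by >= threshold points.

    A line is described by x*cos(theta) + y*sin(theta) = rho.
    Hypothetical helper for illustration only.
    """
    votes = {}
    for x, y in edge_points:
        # Each point votes once per candidate angle (1-degree steps, an assumption).
        for theta_deg in range(180):
            theta = math.radians(theta_deg)
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            key = (rho, theta_deg)
            votes[key] = votes.get(key, 0) + 1
    lines = [(rho, theta_deg, n) for (rho, theta_deg), n in votes.items() if n >= threshold]
    # Strongest lines first.
    return sorted(lines, key=lambda line: line[2], reverse=True)

# Toy input: ten edge pixels lying on the horizontal line y = 3.
edge_points = [(x, 3) for x in range(10)]
lines = hough_lines(edge_points, threshold=10)
```

With this toy input, the horizontal line (rho 3 at 90 degrees) is among the detected lines, along with a few near-horizontal neighbours that the coarse rounding cannot tell apart. A style transfer with crisp, straight edges produces many such strong peaks, which is roughly what "more defined shapes and lines" means here.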

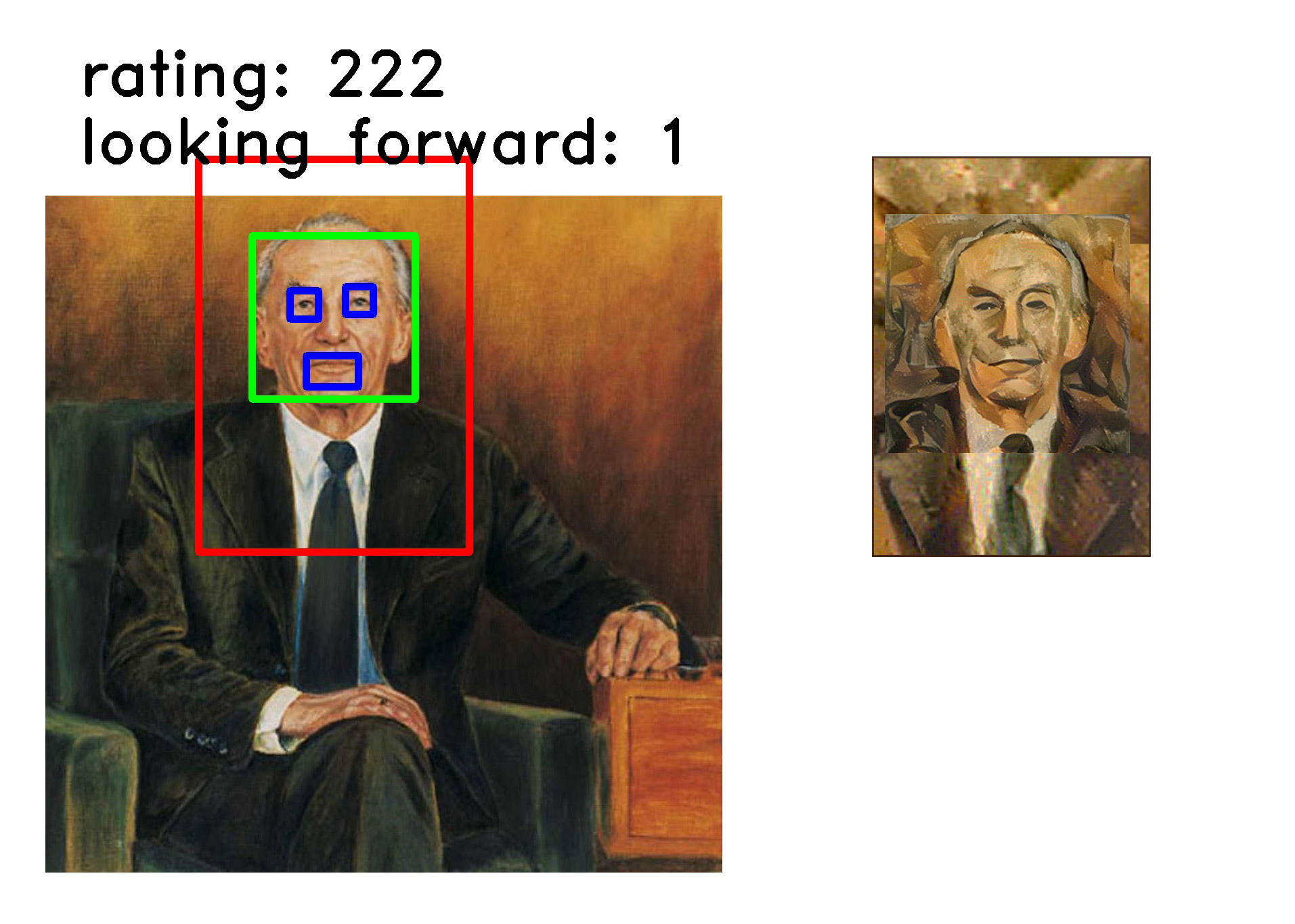

I was a little stumped though. I had expected better results, and my plan was to use those results to determine the path forward. I thought I could perhaps find shapes in the images, and then use the shapes to draw other shapes to create abstract portraits. But those shapes did not materialize from my algorithms. Frustrated and wondering where to go next, I put the images through many of my traditional AI algorithms, including the Viola-Jones face detection algorithm and some of my own beauty-measuring processes.

My robots gathered and studied hundreds of images using a variety of criteria. In the end they settled on the following composition and style. The major deciding factor for going with this composition was that they found it to be the most aesthetically pleasing of every variation they tested. The reason they picked the style was that it produced the richest set of analytical data. For example, the Hough line detection performed in the previous steps revealed more lines and shapes in this particular Picasso than in any of the other style transfers. I expected that this would be useful later on in the process.

At this point I wanted to go forward but was still unsure of how to proceed. Fortunately, artists have a time-honored trick for when they do not know what to do next. We just start painting. So I loaded all the models into one of my large-format robots and set it to work. I figured the answer to what to do next would emerge as the painting was being made.

As the robot painted for about a day, it did not make many aesthetic decisions. It just painted the general texture and shading of the portrait, taking direction from the Hough lines. As I was waiting for this sketch to finish, a second suggestion came from the commissioner of the portrait. I was asked:

“What if we tried to run a standard photo morphing process from one of the faces of the classical cubist work and see whether an interim-stage picture of this process could be a basis for the AI to work from?”

I had never done this before and it was an interesting thought so I gave it a shot. I found the results intriguing. Furthermore, it was fun to see the exact point in the morphing process at which the likeness disappears, and the face becomes a Braque painting.
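As a rough illustration of what an "interim stage" of a morph is, the sketch below blends two images at a fraction `t` between 0 (the portrait) and 1 (the cubist face). It is a simplified stand-in, not the morphing software I used: the helper names are hypothetical, images are plain nested lists of grayscale values, and the geometric warp that a real morph applies between matched feature points is left out. Only the landmark interpolation and the cross-dissolve are shown.

```python
def lerp(a, b, t):
    """Linear interpolation: a at t=0, b at t=1."""
    return (1 - t) * a + t * b

def interpolate_landmarks(src_pts, dst_pts, t):
    """Move each matched feature point (e.g. an eye corner) part-way to its target."""
    return [
        (lerp(xs, xd, t), lerp(ys, yd, t))
        for (xs, ys), (xd, yd) in zip(src_pts, dst_pts)
    ]

def cross_dissolve(src_img, dst_img, t):
    """Blend two same-sized grayscale images pixel by pixel."""
    return [
        [lerp(s, d, t) for s, d in zip(src_row, dst_row)]
        for src_row, dst_row in zip(src_img, dst_img)
    ]

# Toy data (assumed): one landmark and a 1x2 "image" per side.
quarter_pts = interpolate_landmarks([(0, 0)], [(10, 20)], 0.25)
halfway_img = cross_dissolve([[0, 100]], [[100, 0]], 0.5)
```

Stepping `t` from 0 to 1 in small increments yields the frames of a morphing animation, and the frame where the likeness vanishes corresponds to one particular value of `t`.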

Another interesting aspect of creating the morphing animation was that I was able to compare it to an animation of how the neural networks applied style transfer. For the comparison, both animations can be seen to the left.

Interesting comparison aside, I had a path forward. I showed my robots how to use the morphed image. For the second stage of the painting they worked towards completing the portrait in the style of a Braque.

I really liked the results, but there was one major problem. The portrait was too abstract. It no longer resembled the subject of the portrait. It had lost the likeness, and the one thing that every portrait should do is resemble the subject, at least a little. This portrait didn’t, and I realized it needed another round to bring the likeness back.

To accomplish this, I had the robot use another round of both style transfer and morphing to return to something that better resembled the original portrait. The robot had gone too abstract, so I was telling it to be more representational.

While the style transfer at this stage was automatic, the morphing required manual input from me. I had to define the edges of the various features in both the source abstract image and the style image. I tried to automate this step, but none of my algorithms could make enough sense of the abstract image. They didn’t know how to define things such as the background or the location of the eyes. I will work on better approaches in the future, but for now I do not find it surprising that this is a weakness of my robot’s computer vision.

The final painting was completed over the course of a week by my largest robot. Below is a timelapse video of its completion…

The description I just gave about how my robots produced this has been an abbreviated outline of my entire generative AI art system. Several other algorithms and computer vision systems were used though I really only concentrated on describing the back and forth between Style Transfer and Morphing. The robot began by using neural networks to produce something in the style of a cubist Picasso, then morphed the image to imitate a Braque. Once things had gone too abstract, it finished by using both techniques to return to a more representational portrait. Back and forth and back again.

After the robot had completed the 12,382 strokes seen in the video, I touched it up by hand for a couple of minutes before applying a clear varnish. It is always a relief that while my robots can easily complete 99.9% of the thousands of strokes they apply, I am still needed for the final 0.1%. My job as an artist remains safe for now.

Pindar Van Arman

cloudpainter.com