A Deeper Learning Analysis of The Scream

I am a big fan of what the Google Brain Team, specifically scientists Vincent Dumoulin, Jonathon Shlens, and Manjunath Kudlur, have accomplished with Style Transfer. In short, they have developed a way to take any photo and paint it in the style of a famous painting. The results are remarkable, as can be seen in the following grid of original photos painted in the style of historical masterpieces.

However, as can be seen in the following pastiches of Munch's The Scream, there are a couple of systematic failures with the approach. The Deep Learning algorithm struggles to capture the flow of the brushstrokes or "match a human level understanding of painting abstraction." Notice how the only thing truly transferred is color and texture.

Seeing this limitation, I am currently attempting to improve upon Google's work by modeling both the brushstrokes and the abstraction. In the same way that the color and texture are being successfully transferred, I want the actual brushstrokes and abstractions to resemble the original artwork.

So how would this be possible? While I am not sure how to achieve artistic abstraction, modeling the brushstrokes is definitely doable. So let's start there.

To model brushstrokes, Deep Learning would need brushstroke data, lots of brushstroke data. Simply put, Deep Learning needs accurate data to work. In the case of Google's successful pastiches (images made in the style of an artwork), the data was found in the images of the masterpieces themselves. Deep Neural Nets would examine and re-examine the famous paintings on a micro and macro level to build a model that can be used to convert a provided photo into the painting's style. As mentioned previously, this works great for color and texture, but fails with the brushstrokes because the model doesn't really have any data on how the artist applied the paint. While strokes can be seen on the canvas, there isn't a mapping of brushstrokes that could be studied and understood by the Deep Learning algorithms.

As I pondered this limitation, I realized that I had this exact data, and lots of it. I have been recording detailed brushstroke data for almost a decade. For many of my paintings, each and every brushstroke has been recorded in a variety of formats including time-lapse videos, stroke maps, and most importantly, a massive database of the actual geometric paths. And even better, many of the brushstrokes were crowdsourced from internet users around the world - where thousands of people took control of my robots to apply millions of brushstrokes to hundreds of paintings. In short, I have all the data behind each of these strokes, all just waiting to be analyzed and modeled with Deep Learning.

This was when I looked at the systematic failures of pastiches made from Edvard Munch's The Scream, and realized that I could capture Munch's brushstrokes and, as a result, make a better pastiche. The approach to achieve this is pretty straightforward, though labor intensive.

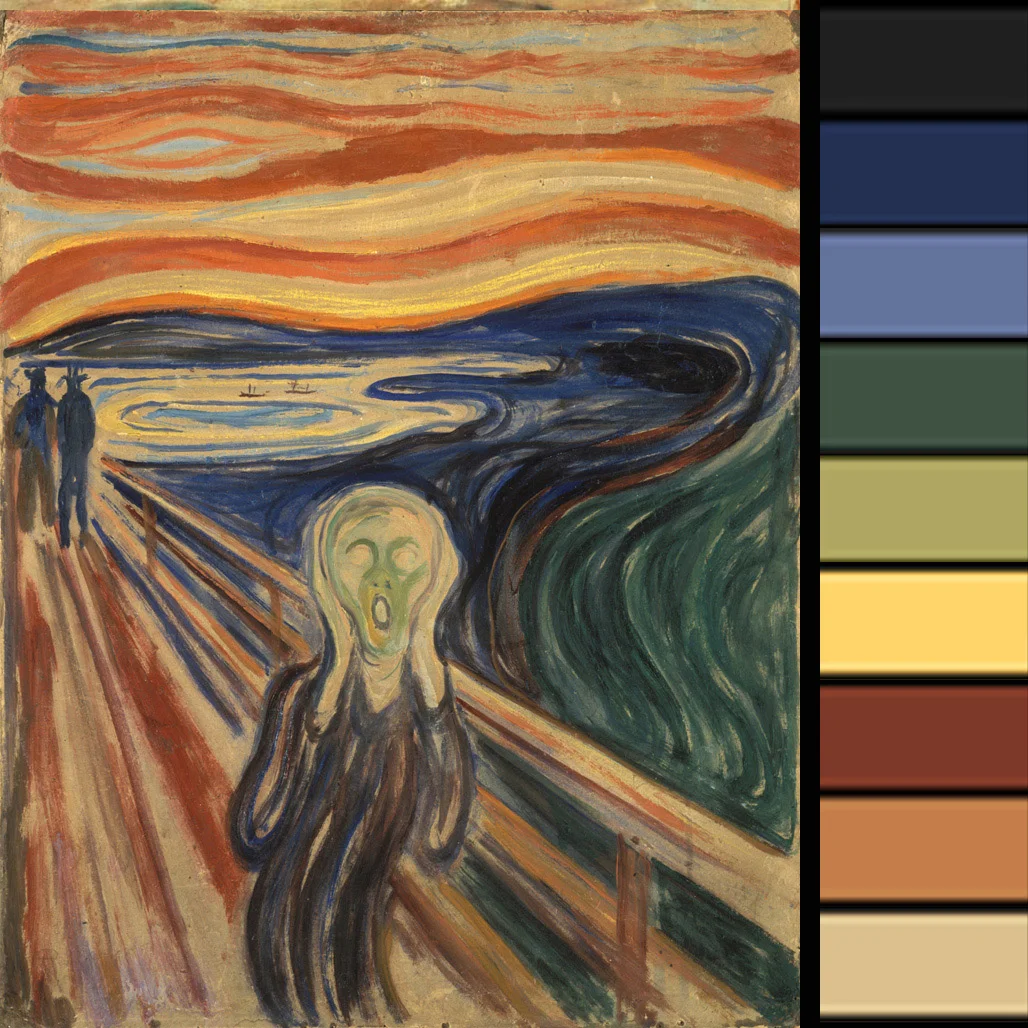

This process all begins with the image and a palette. I have no idea what Munch's original palette was, but the following is an approximate representation made by running his painting through k-means clustering and some of my own deduction.

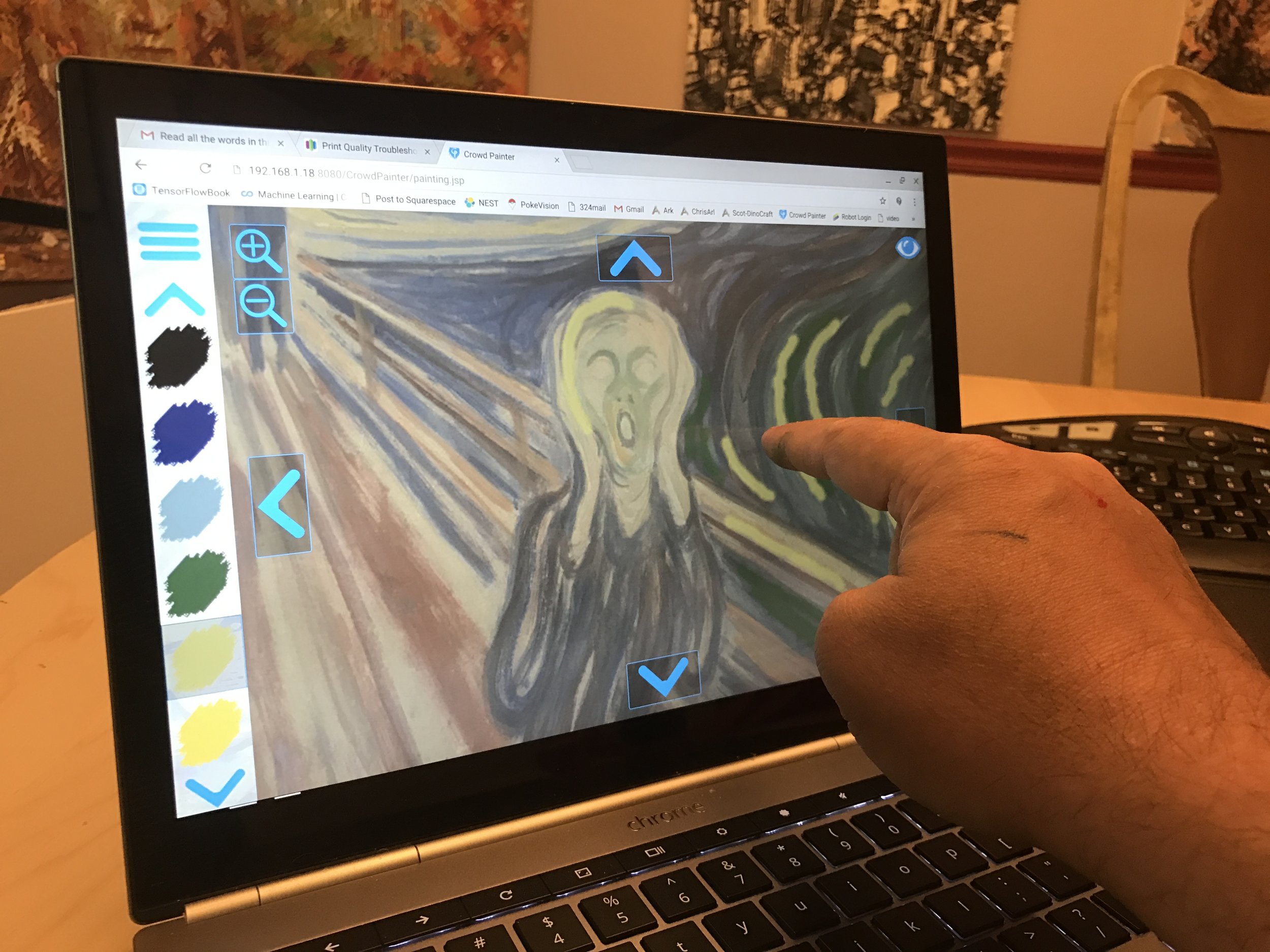
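As a rough sketch of how a palette could be pulled out of a painting with k-means clustering: the pixels are grouped into k clusters of similar color, and each cluster's average becomes one palette color. The function name `kmeans_palette`, its parameters, and the pixel values in the usage note are assumptions for illustration, not the code behind the palette above, and the hand deduction mentioned earlier is not modeled here.

```python
import random


def kmeans_palette(pixels, k, iterations=20, seed=0):
    """Cluster RGB pixels into k palette colors with plain k-means.

    pixels is a list of (r, g, b) tuples. Raises ValueError if the image
    has fewer than k distinct colors.
    """
    rng = random.Random(seed)
    # Start from k distinct colors picked at random from the image.
    centers = rng.sample(sorted(set(pixels)), k)
    for _ in range(iterations):
        # Assignment step: group each pixel with its nearest center.
        clusters = [[] for _ in range(k)]
        for p in pixels:
            nearest = min(
                range(k),
                key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centers[i])),
            )
            clusters[nearest].append(p)
        # Update step: move each center to the mean color of its cluster.
        new_centers = []
        for center, members in zip(centers, clusters):
            if members:
                n = len(members)
                new_centers.append(tuple(sum(ch) / n for ch in zip(*members)))
            else:
                new_centers.append(center)  # an empty cluster keeps its center
        if new_centers == centers:
            break  # converged
        centers = new_centers
    return [tuple(round(c) for c in center) for center in centers]
```

For example, `kmeans_palette([(250, 100, 20)] * 10 + [(254, 104, 24)] * 10 + [(20, 30, 90)] * 20, k=2)` merges the two near-identical oranges into one palette entry and keeps the blue as the other. On a real photograph of a painting the pixel list would come from an image library, and k would be chosen by eye.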

With the painting and palette in hand, I then set cloudpainter up to paint in manual mode. To paint a replica, all I did was trace brushstrokes over the image on a touch screen display. The challenging part is painting the brushstrokes in the manner and order that I think Edvard Munch may have done them. It is sort of a historical reenactment.

As I paint with my finger, these strokes are executed by the robot.

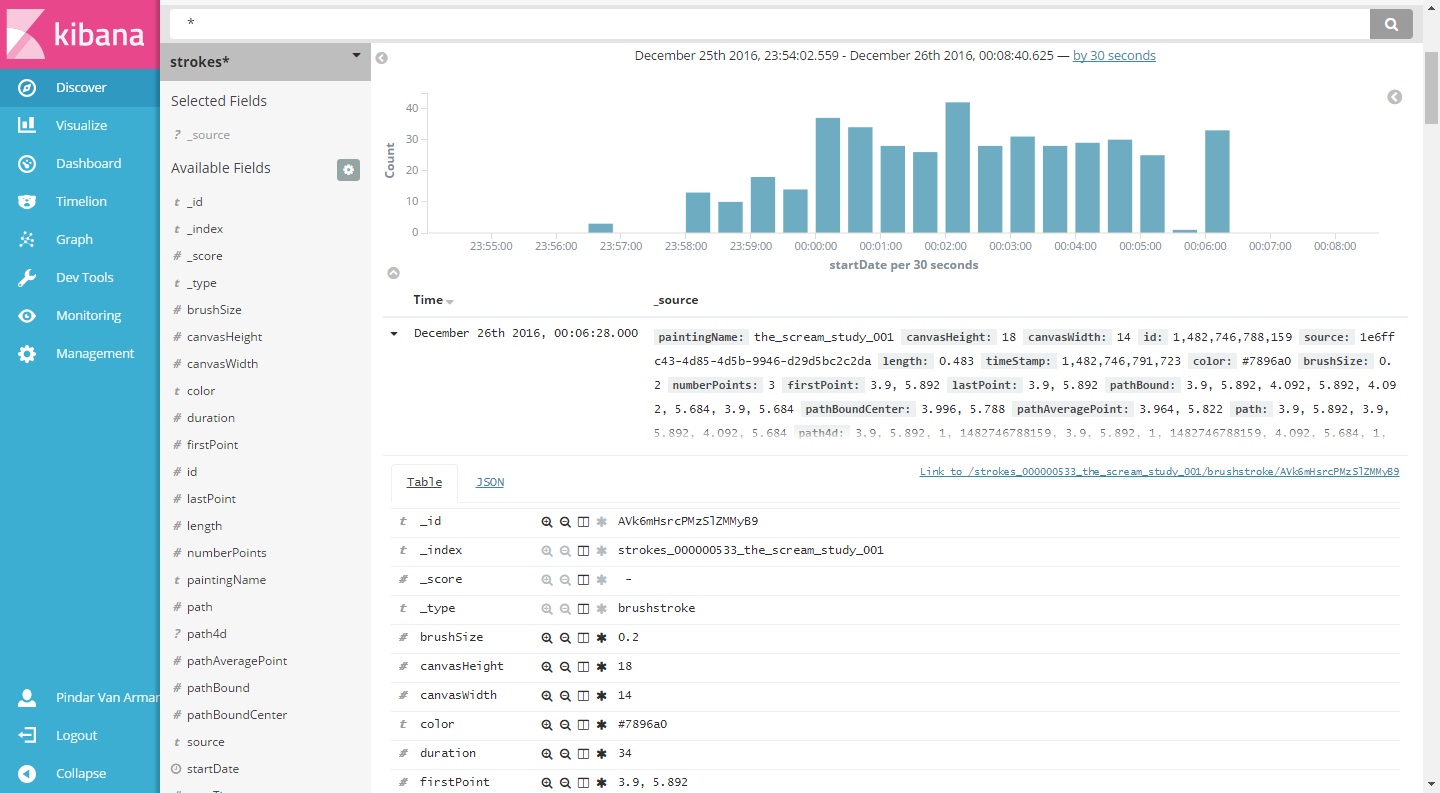

More importantly, each brushstroke is saved in an Elasticsearch database with detailed information on its exact geometry and color.
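To make the idea of a stored brushstroke concrete, here is a minimal sketch of what one record might look like before it is sent to a document store. The class `Brushstroke` and every field name in it are hypothetical, since the actual schema is not shown here, and the sketch only builds the JSON document without talking to Elasticsearch. Coordinates are rounded to a thousandth of an inch.

```python
import json
import math
from dataclasses import dataclass, asdict


@dataclass
class Brushstroke:
    """One recorded stroke (hypothetical schema, for illustration only)."""
    painting: str       # which painting the stroke belongs to
    order: int          # position of the stroke in the painting sequence
    color_rgb: tuple    # palette color loaded on the brush
    path_inches: list   # [(x, y), ...] points along the stroke, in inches

    def length_inches(self):
        # Sum of the straight segments between consecutive path points.
        points = self.path_inches
        return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

    def to_document(self):
        # Build a JSON document with geometry rounded to 0.001 inch.
        doc = asdict(self)
        doc["path_inches"] = [[round(x, 3), round(y, 3)] for x, y in self.path_inches]
        doc["length_inches"] = round(self.length_inches(), 3)
        return json.dumps(doc)
```

A stroke such as `Brushstroke("The Scream replica", 1, (226, 120, 40), [(0.0, 0.0), (3.0, 4.0)])` serializes to a single JSON document, and keeping `order` alongside the geometry preserves the sequence in which the strokes were laid down.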

At the conclusion of this replica, detailed data exists for each and every brushstroke to the thousandth of an inch. This data can then be used as the basis for an even deeper Deep Learning analysis of Edvard Munch's The Scream. An analysis beyond color and texture, where his actual brushstrokes are modeled and understood.

So this brings us to whether or not abstraction can be captured. And while I am not sure that it can, I think I have an approach that will work at least some of the time. To this end, I will be adding a second set of data that labels the context of The Scream. This will include geometric bounds around the various areas of the painting and be used to consider the subject matter in the image. So while the Google Brain Team used only an image of the painting for its pastiches, the process that I am trying to perfect will consider the original artwork, the brushstrokes, and how each brushstroke was applied to different parts of the painting.
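One simple way the context labels could be combined with stroke paths is sketched below: each labeled area is a bounding box, and a stroke takes the label of the box that holds most of its points. The region names and coordinates in `REGIONS` are made up for illustration, and `region_of` and `label_stroke` are hypothetical helpers rather than part of any existing tool.

```python
from collections import Counter

# Hypothetical labeled areas: name -> (x_min, y_min, x_max, y_max) in inches.
REGIONS = {
    "sky": (0.0, 0.0, 16.0, 8.0),
    "figure": (6.0, 8.0, 10.0, 20.0),
    "bridge": (0.0, 8.0, 6.0, 20.0),
}


def region_of(point, regions=REGIONS):
    """Return the first region whose bounding box contains the point."""
    x, y = point
    for name, (x0, y0, x1, y1) in regions.items():
        if x0 <= x <= x1 and y0 <= y <= y1:
            return name
    return "unlabeled"


def label_stroke(path, regions=REGIONS):
    """Label a stroke with the region that contains most of its points."""
    if not path:
        return "unlabeled"
    counts = Counter(region_of(p, regions) for p in path)
    return counts.most_common(1)[0][0]
```

With labels like these attached, strokes could be grouped by subject matter, so that strokes in the sky are studied separately from strokes in the central figure. Points on a shared border go to whichever region is listed first, which is a simplification.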

Ultimately, I believe that by considering all three of these data sources, a pastiche made from The Scream will more accurately replicate the style of Edvard Munch.

So yes, these are lofty goals, and I am getting ahead of myself. First I need to collect as much brushstroke data as possible, and I leave you now to return to that pursuit.

Lost in Abstraction - Style Transfer and the State of the Art in Generative Imaging

I have been seeing lots of really cool filters on my friends' photos recently, especially from people using the Prisma app. Below is an example of such a photo, and one of my favorites that I have seen.

The filters being applied to these photos are obviously a lot more than adjusting levels and contrast, but what exactly are they? While I cannot say for sure what Prisma is using, a recently released research paper by Google scientists gives a lot of insight into what may be happening.

The paper, titled A Learned Representation for Artistic Style and written by Vincent Dumoulin, Jonathon Shlens, and Manjunath Kudlur of Google Brain, details the work of multiple research teams in the quest to achieve the perfect pastiche. No worries if you don't know what a pastiche is; I didn't either until I read the paper. Well, I knew what one was, I just didn't know what they were called. So a pastiche is an image where an artist tries to represent something in the style of another artist. Here are several examples that the researchers provide.

In the above image you can see how the researchers have attempted to render three photographs in the style of Lichtenstein, Rouault, Monet, Munch, and Van Gogh. The effects are pretty dramatic. Each pastiche looks remarkably like both source images. One of the coolest things about the research paper is that it contains the detailed, replicable process so that you too can create your own pastiche-producing software. While photo editing apps like Prisma seem to be doing a little more than just a single pastiche, my gut tells me that this process or something similar is behind much of what they are doing and how they are doing it so well.

So looking at the artificial creativity behind these pastiches, I like to ponder the bigger question. How close are we to a digital artist? I always ask this 'cause that is what I am trying to create.

Well, as cool and cutting edge as these pastiches are, they are still just filters of photos. And even though this is the state of the art coming out of Google Brain, they are not even true pastiches yet. While they do a good job of transferring color and texture, they don't really capture the style of the artist. You wouldn't look at any of the pastiches in the second column above and think that Lichtenstein actually did them. They share color, contrast, and texture, but that's about it. Or look more closely at these pastiches made from Edvard Munch's The Scream (top left).

While the colors and textures of the imitative works are spot on, the Golden Gate Bridge looks nothing like the abstracted bridge in The Scream. Furthermore, the two portraits have none of the distortion found in the face of the central figure of the painting. These will only be true pastiches when these abstract elements are captured alongside the color, texture, and contrast. The style and process behind producing these pastiches seem to be getting lost in the abstraction layer.

How do we imitate abstraction? No one knows yet, but there are a lot of us working on the problem, and as of November 1, 2016, this is some of the best work being done on it.