Artonomo.us - beginnings of a completely autonomous painting robot.

Artonomo.us is my latest painting robot project.

In this project, one of my robots

1: Selects its own imagery to paint.

2: Applies its own style with Deep Learning Neural Networks.

3: Then paints with Feedback Loops.
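
For readers curious what painting with a feedback loop can look like in code, here is a minimal sketch. It is an illustration under my own assumptions, not the robot's actual software: the canvas is reduced to a short list of brightness values, and `apply_stroke` and `sloppy_stroke` are hypothetical stand-ins for the camera and arm.

```python
def paint_with_feedback(target, canvas, apply_stroke, tolerance=0.05, max_strokes=1000):
    """Repeat: observe the canvas, find the worst region, paint there.

    target and canvas are equal-length lists of brightness values in [0, 1],
    one per canvas region. apply_stroke(canvas, index, value) is a
    hypothetical stand-in for the robot arm and mutates canvas in place.
    Returns the number of strokes used.
    """
    for stroke_count in range(max_strokes):
        # Observe: how far is each region from where it should be?
        errors = [abs(t - c) for t, c in zip(target, canvas)]
        worst = max(range(len(errors)), key=errors.__getitem__)
        if errors[worst] <= tolerance:
            return stroke_count  # close enough everywhere, stop painting
        # Act: paint the region that currently looks most wrong.
        apply_stroke(canvas, worst, target[worst])
    return max_strokes


def sloppy_stroke(canvas, index, value):
    """Simulated brush: each stroke closes only half of the remaining gap."""
    canvas[index] += 0.5 * (value - canvas[index])


target = [0.0, 0.5, 1.0]
canvas = [1.0, 1.0, 1.0]  # blank white canvas
strokes = paint_with_feedback(target, canvas, sloppy_stroke)
```

The point of the loop is that no single stroke has to be perfect. The canvas is re-examined after every stroke, so mistakes get corrected by later strokes.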

An animated GIF of the process is below…

Artonomo.us is currently working on a series of sixteen portraits in the style above. The portraits were selected from user-submitted photos. It will be painting these portraits over the next couple of weeks. When done, it will select a new style and sixteen new user-submitted portraits. If you would like to see more of these portraits, or submit yours for consideration, visit artonomo.us.

Below are the images selected for this round of portraits. We will publish the complete set when Artonomo.us is finished with it…

Pindar

New Art Algorithm Discovered by autonymo.us

Have started a new project called autonymo.us where I have let one of my painting robots go off on its own. It experiments and tries new things, sometimes completely abstract, other times using a simple algorithm to complete a painting. Sometimes it uses different combinations of the couple dozen algorithms I have written for it.

Most of the results are horrendous. But sometimes it comes up with something really beautiful. I am putting the beautiful ones up at the website autonymo.us. But also thought I would share new algorithm discoveries here.

So the second algorithm we discovered looks really good on smiling faces. And it is really simple.

Step 1: Detect that the subject has a big smile. Seriously, because this doesn’t look good otherwise.

Step 2: Isolate background and separate it from the faces.

Step 3: Quickly cover background with a mixture of teal & white paint.

Step 4: Use K-Means Clustering to organize pixels with respect to their r, g, b values and x, y coordinates.

Step 5: Paint light parts of painting in varying shades of pyrrole orange.

Step 6: Paint dark parts of painting in crimsons, grays, and black.
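
Step 4 is the only part that needs much code, so here is a rough sketch of it in plain Python. Several things in it are my assumptions for illustration rather than what the robot actually runs: the function names, the `xy_weight` knob, the scaling of every feature to the 0 to 1 range, and the farthest-first choice of starting centers (used so the result is repeatable without random numbers).

```python
from math import dist


def pixel_features(pixels, width, height, xy_weight=1.0):
    """Turn a row-major list of (r, g, b) pixels into (r, g, b, x, y) points.

    Every feature is scaled to 0..1 so color and position are comparable.
    xy_weight (an assumed knob) controls how much position matters.
    """
    feats = []
    for i, (r, g, b) in enumerate(pixels):
        x, y = i % width, i // width
        feats.append((r / 255, g / 255, b / 255,
                      xy_weight * x / max(width - 1, 1),
                      xy_weight * y / max(height - 1, 1)))
    return feats


def kmeans(points, k, iterations=20):
    """Plain k-means with deterministic farthest-first starting centers."""
    centers = [points[0]]
    while len(centers) < k:
        # Next center: the point farthest from all centers chosen so far.
        centers.append(max(points, key=lambda p: min(dist(p, c) for c in centers)))
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assign each point to its nearest center.
        labels = [min(range(k), key=lambda c: dist(p, centers[c])) for p in points]
        # Move each center to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = tuple(sum(v) / len(members) for v in zip(*members))
    return labels, centers


def dark_to_light(centers):
    """Cluster indices ordered from darkest to lightest mean color."""
    return sorted(range(len(centers)), key=lambda c: sum(centers[c][:3]))


# Tiny 4x2 test image: dark on the left half, light on the right half.
row = [(10, 10, 10), (10, 10, 10), (240, 240, 240), (240, 240, 240)]
labels, centers = kmeans(pixel_features(row + row, width=4, height=2), k=2)
order = dark_to_light(centers)
```

Because x and y are part of each point, pixels end up grouped into patches that are similar in color and also close together on the canvas. The darkest-to-lightest ordering is one way to decide which clusters would get the oranges of Step 5 and which the crimsons, grays, and black of Step 6.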

A simple and fun algorithm to paint smiling portraits with. Here are a couple…

Emerging Faces - A Collaboration with 3D (aka Robert Del Naja of Massive Attack)

Have been working on and off for the past several months with Bristol-based artist 3D (aka Robert Del Naja, the founder of Massive Attack). We have been experimenting with applying GANs, CNNs, and many of my own artificial intelligence algorithms to his artwork. I have long been working at encapsulating my own artistic process in code. 3D and I are now exploring if we can capture parts of his artistic process.

It all started simply enough with looking at the patterns behind his images. We started creating mash-ups by using CNNs and Style Transfer to combine the textures and colors of his paintings with one another. It was interesting to see what worked and what didn't and to figure out what about each painting's imagery became dominant as they were combined.

As cool as these looked, we were both left underwhelmed by the symbolic and emotional aspects of the mash-ups. We felt the art needed to be meaningful. All that was really being combined was color and texture, not symbolism or context. So we thought about it some more and 3D came up with the idea of trying to use the CNNs to paint portraits of historical figures who made significant contributions to printmaking. A couple of people came to mind as we bounced ideas back and forth before 3D suggested Martin Luther. At first I thought he was talking about Martin Luther King Jr, which left me confused. But then I realized he was talking about the author of The 95 Theses, and it made more sense. Not sure if 3D realized I was confused, but I think I played it off well and he didn't suspect anything. We tried applying CNNs to Martin Luther's famous portrait and got the following results.

It was nothing all that great, but I made a couple of paintings from it to test things. Also tried to have my robots paint a couple of other new media figures like Mark Zuckerberg.

Things still were not gelling though. Good paintings, but nothing great. Then 3D and I decided to try some different approaches.

I showed him some GANs where I was working on making my robots imagine faces. Showed him how a really neat part of the GAN occurred right at the beginning when faces emerge from nothing. I also showed him a 5x5 grid of faces that I have come to recognize as a common visualization when implementing GANs in tutorials. We got to talking about how as a polyptych, it recalled a common Warhol trope except that there was something different. Warhol was all about mass produced art and how cool repeated images looked next to one another. But these images were even cooler, because it was a new kind of mass production. They were mass produced imagery made from neural networks where each image was unique.

I started having my GANs generate tens of thousands of faces. But I didn't want the faces in too much detail. I liked how they looked before they resolved into clear images. It reminded me of how my own imagination worked when I tried to picture things in my mind. It is foggy and nondescript. From there I tested several of 3D's paintings to see which would best render the imagined faces.

3D's Beirut (Column 2) was the most interesting, so I chose that one and put it and the GANs into the process that I have been developing over the past fifteen years. A simplified outline of the artificially creative process it became can be seen in the graphic below.

My robots would begin by having the GAN imagine faces. Then I ran the Viola-Jones face detection algorithm on the GAN images until it detected a face. At that point, right when the general outlines of faces emerged, I stopped the GAN. Then I applied a CNN Style Transfer on the nondescript faces to render them in the style of 3D's Beirut. Then my robots started painting. The brushstroke geometry was taken out of my historic database that contains the strokes of thousands of paintings, including Picassos, Van Goghs, and my own work. Feedback loops refined the image as the robot tried to paint the faces on 11"x14" canvases. All told, dozens of AI algorithms, multiple deep learning neural networks, and feedback loops at all levels started pumping out face after face after face.
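
The "stop the GAN as soon as a face is detected" step can be sketched in a few lines. This is a simplified illustration, not the code my robots run: `first_emerging_face` and `detect_faces` are names made up for the example, and the detector is passed in as a plain function so any Viola-Jones implementation could be plugged in (OpenCV's `cv2.CascadeClassifier` with `detectMultiScale` is one commonly used option). The demo at the bottom fakes both the snapshots and the detector with numbers.

```python
def first_emerging_face(snapshots, detect_faces):
    """Return (step, image) for the earliest snapshot where a face is found.

    snapshots: iterable of images saved at successive points while the GAN
        is generating, foggiest first.
    detect_faces: callable returning a list of face boxes (empty if none).
    Returns None if the detector never fires.
    """
    for step, image in enumerate(snapshots):
        if detect_faces(image):
            return step, image  # stop here, before the face fully resolves
    return None


# Toy stand-ins: each "image" is just a clarity score between 0 and 1,
# and the fake detector fires once the score reaches 0.4.
def toy_detector(clarity):
    return [(0, 0, 1, 1)] if clarity >= 0.4 else []


result = first_emerging_face([0.0, 0.2, 0.4, 0.6, 0.8], toy_detector)
```

The snapshot returned is the first one the detector accepts, which is the foggy, barely-there face that then gets handed to Style Transfer.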

Thirty-two original faces later it arrived at the following polyptych which I am calling the First Sparks of Artificial Creativity. The series itself is something I have begun to refer to as Emerging Faces. I have already made an additional eighteen based on a style transfer of my own paintings, and plan to make many more.

Above is the piece in its entirety as well as an animation of it working on an additional face at an installation in Berlin. You can also see a comparison of 3D's Beirut to some of the faces. An interesting aspect of the artwork is that despite how transformative the faces are from the original painting, the artistic DNA of the original is maintained with those seemingly random red highlights.

It has been a fascinating collaboration to date. Looking forward to working with 3D to further develop many of the ideas we have discussed. Though this explanation may appear to express a lot of artificial creativity, it only goes into his art on a very shallow level. We are always talking and wondering about how much deeper we can actually go.

Pindar

Simon Colton and The Painting Fool

The Painting Fool - Mood: reflective. Desired quality: simple.

Avoiding Uncreative Behavior

Was excited to recently make contact with Simon Colton, the artist and developer behind The Painting Fool. After a brief Twitter chat with him, heard about his thoughts on the criteria that make things "uncreative". If I understood him correctly, it is not so much that he is trying to make software creative, but that he is trying to avoid things that could be thought of as "uncreative", such as random number generation.

He directed me to one of his articles that went into details on this. What I read was really interesting.

The work begins with a rather elegant definition of Computational Creativity that I agree with.

Computational Creativity: The philosophy, science and engineering of computational systems which, by taking on particular responsibilities, exhibit behaviours that unbiased observers would deem to be creative.

There are many interesting thoughts throughout the rest of the paper, but the two concepts that I found most relevant were that

1) artificially creative systems should avoid randomness

and

2) they should attempt to frame, or give context to, what they are creating.

He begins by criticizing the over-reliance on random number generation in computationally creative systems. Using the example of poetry, Colton writes that software could use random number generation to create a poem with "exactly the same letters in exactly the same order as one penned by a person." But despite the fact that both works are identical and read identically, the poem created with random numbers is meaningless by comparison to the poem written by a person.

Why?

Well there are lots of reasons, but Colton elaborates on this to emphasize the importance of framing the artwork, where

"Framing is a term borrowed from the visual arts, referring not just to the physical framing of a picture to best present it, but also giving the piece a title, writing wall text, penning essays and generally discussing the piece in a context designed to increase its value."

In more detail he goes on to say...

"We advocate a development path that should be followed when building creative software: (i) the software is given the ability to provide additional, meta-level, information about its process and output, e.g., giving a painting or poem a title (ii) the software is given the ability to write commentaries about its process and its products (iii) the software is given the ability to write stories – which may involve fictions – about its processes and products, and (iv) the software is given the ability to engage in dialogues with people about what it has produced, how and why. This mirrors, to some extent, Turing’s original proposal for an intelligence test."

This view is really interesting to me.

In my own attempts at artificial creativity, I have always tried to follow both of these ideas. I avoid relying on random number generation to achieve unexpected results. And even though this has long been my instinct, I have never been able to articulate the reason why as well as Colton does in this writing. Given the importance of a creative agent to provide a frame for why and how each creative decision was made, random number generation is a meaningless reason to do something, which in effect takes away from the meaning of a creation.

Imagine being struck by the emotional quality of a color palette in an artwork, then asking the artist why they chose that particular color palette. Suppose the artist's response was, "It was simple really. I just rolled a bunch of dice and let them decide on which color I painted next." The emotional quality of the color palette would evaporate, leaving us feeling empty and cheated that we were emotionally moved by randomly generated noise.

With this reading in mind and the many works of Simon Colton and The Painting Fool, I will continue to try and be as transparent with the decision making process of my creative robots as possible. Furthermore, while I do try to visually frame their decision making processes with timelapses of each painting from start to finish, I am now going to look at ways to verbally frame them. Will be challenging, but it is probably needed.

If you want to see more of Simon Colton and The Painting Fool's work check out the You Can’t Know my Mind exhibition from 2013.

cloudpainter AI described in a Single Graphic

I always struggle to describe all the AI behind cloudpainter. It is all over the place. The following graphic that I put together for my Elastic{ON} 17 Demo sums it up pretty well. While it doesn't include all the algorithms, it features many of my favorite ones.

Our First Truly Abstract Painting

Have had lots of success with Style Transfer recently. With the addition of Style Transfer to some of our other artificially creative algorithms, I am wondering if cloudpainter has finally produced something that I feel comfortable calling a true abstract painting. It is a portrait of Hunter.

In one sense, abstract art is easy for a computer. A program can just generate random marks and call the finished product abstract. But that's not really an abstraction of an actual image, it's the random generation of shapes and colors. I am after true abstraction and with Style Transfer, this might just be possible.

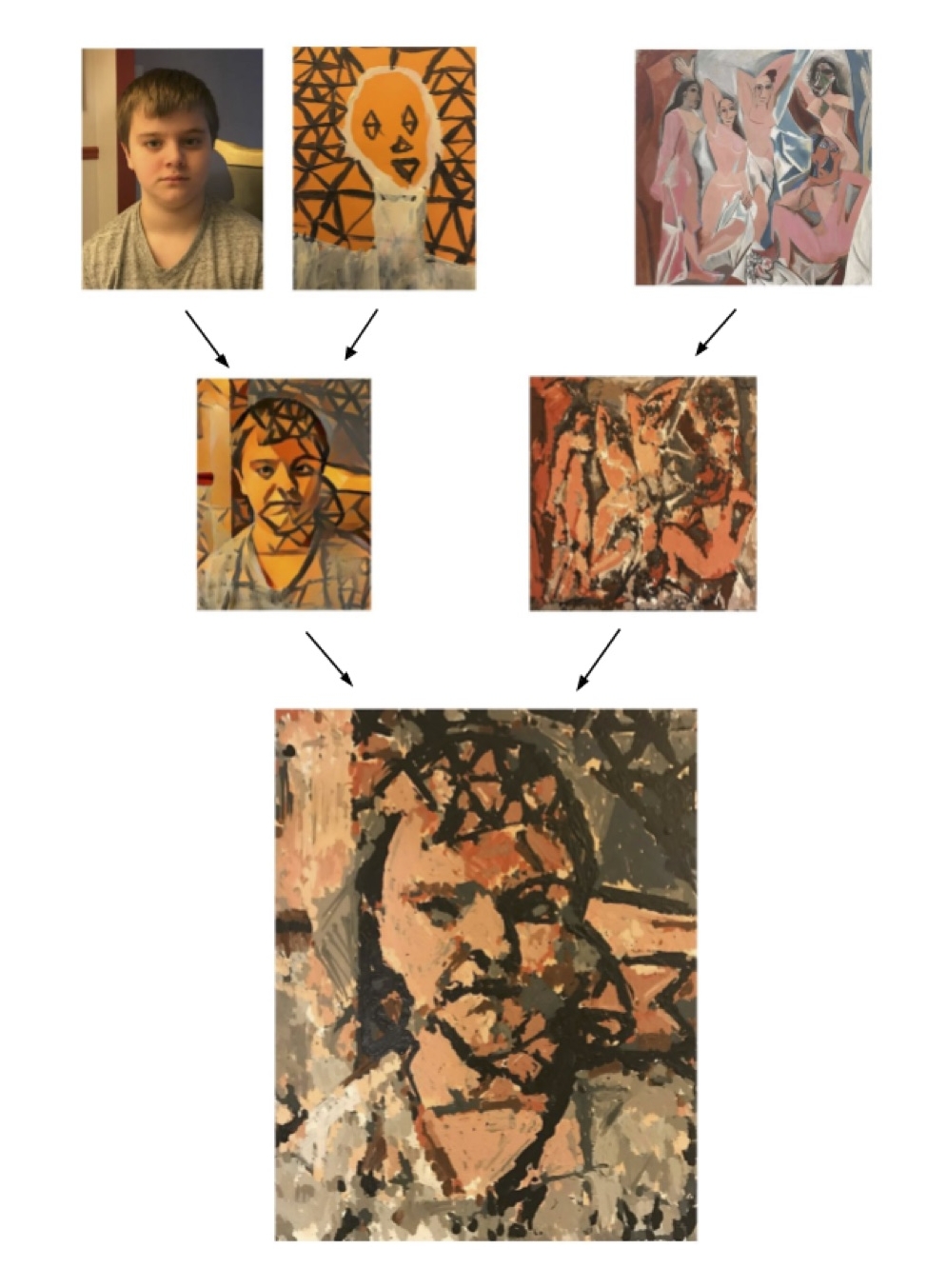

More details to come as we refine the process, but in short the image above was created from three source images, seen in the top row below: an image of Hunter, his painting, and Picasso's Les Demoiselles d'Avignon.

Style Transfer was applied to the photo of Hunter to produce the first image in the second row. The algorithm tried to paint the photo in the style of Hunter's painting. The second image in the second row is a reproduction of Picasso's painting made and recorded by one of my robots using many of its traditional algorithms and brush strokes by me.

The final painting in the final row was created by cloudpainter's attempt to paint the Style Transfer Image with the brush strokes and colors of the Picasso reproduction.
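
To give a feel for the color half of that last step, here is a small sketch of repainting one image using only the colors found in another. It is a deliberate simplification and an assumption on my part about how such a step could be written: the function names and sample colors are invented for the example, and it ignores brush strokes entirely.

```python
def nearest_palette_color(pixel, palette):
    """Pick the palette color closest to pixel by squared RGB distance."""
    return min(palette, key=lambda c: sum((a - b) ** 2 for a, b in zip(pixel, c)))


def repaint_with_palette(pixels, palette):
    """Replace every pixel with its nearest color from the palette."""
    return [nearest_palette_color(p, palette) for p in pixels]


# Made-up palette standing in for colors sampled from the reproduction.
palette = [(200, 120, 90), (60, 70, 140), (240, 230, 210)]
# Made-up pixels standing in for the Style Transfer image.
pixels = [(210, 110, 100), (50, 60, 150), (250, 250, 250)]
repainted = repaint_with_palette(pixels, palette)
```

Each pixel of the source keeps its place in the composition but can only be expressed in colors that already exist in the other painting.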

While this appears like just another pre-determined algorithm that lacks true creativity, the creation of paintings by human artists follows a remarkably similar process. They draw upon multiple sources of inspiration to create new imagery.

The further along we get with our painting robot, the less sure I am whether we are less creative than we think or computers are much more creative than we imagined.

Stroke Maps, TensorFlow, and Deep Learning

Just completed recreations of The Scream and The Mona Lisa. These are not meant to be accurate reproductions of the paintings, but an attempt at recreating how the artists painted each stroke. The idea is that once these strokes are mapped, TensorFlow and Deep Learning can use the data to make better pastiches.
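
As a rough idea of what a stroke map could look like as data, here is a minimal sketch. The field names and the JSON layout are hypothetical, chosen for illustration; they are not the format of my actual database.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Stroke:
    order: int    # position of the stroke in the painting sequence
    path: list    # [[x, y], ...] points along the stroke, canvas units 0..1
    color: list   # [r, g, b] with 0-255 channels
    width: float  # brush width as a fraction of canvas width


def save_stroke_map(strokes):
    """Serialize strokes to JSON in the order they were painted."""
    ordered = sorted(strokes, key=lambda s: s.order)
    return json.dumps([asdict(s) for s in ordered])


def load_stroke_map(text):
    """Rebuild Stroke records from the JSON produced by save_stroke_map."""
    return [Stroke(**row) for row in json.loads(text)]


strokes = [
    Stroke(order=1, path=[[0.2, 0.3], [0.4, 0.35]], color=[30, 30, 60], width=0.02),
    Stroke(order=0, path=[[0.1, 0.9], [0.9, 0.9]], color=[220, 120, 40], width=0.05),
]
restored = load_stroke_map(save_stroke_map(strokes))
```

Keeping the painting order along with the geometry is what separates a stroke map from a plain image. It records how the painting was built up, which is the part a learning system would need.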

Full Visibility's Machine Learning Sponsorship

Wanted to take a moment to publicly thank cloudpainter's most recent sponsor, Full Visibility.

Full Visibility is a Washington, D.C.-based software consulting boutique that I have been lucky enough to become closely associated with. Their sponsorship arose from a conversation I had with one of their partners. Was telling him how I thought that Machine Learning, which has long been an annoying buzzword, was finally showing evidence of being mature. Next thing I knew Full Visibility bought a pair of mini-supercomputers for the partner and me to experiment with. One of the two boxes can be seen in the picture of my home-based lab below. It's the box with the cool white skull on it. While nothing too fancy, it has about 2,500 more cores than any other machine I have ever been fortunate enough to work with. The fact that private individuals such as myself can now run ML labs in their own homes might be the biggest indicator that a massive change is on the horizon.

Full Visibility joins the growing list of cloudpainter sponsors which now includes Google, 7Bot, RobotArt.org, 50+ Kickstarter Backers, and hundreds of painting patrons. I am always grateful for any help with this project that I can get from industry and individuals. All these fancy machines are expensive, and I couldn't do it without your help.

Pindar Van Arman