Hello7Bot - A Tutorial to get 7Bot Moving

A couple of people have asked how I got my 7Bots running, so I am writing this tutorial to demonstrate how I got their example code working. I will also be giving all the code I used to run my 7Bots in the Robot Art 2017 Contest. Hopefully this tutorial is helpful, but if it isn't, email me with any issues and I will try to respond as quickly as possible, maybe even immediately.

So here is a quick list of steps in this tutorial:

1: Get your favorite PC or Mac.

2: Plug 7Bot into your computer.

3: Make sure drivers for the Arduino Due are installed.

4: Install Processing3 to run code.

5: Download 7Bot example code.

6: Open 7Bot example code in Processing3.

7: Make minor configuration adjustments.

8: Run the example code in Processing3.

9: Watch 7Bot come to life.

10: Experiment with my 7Bot code.

In more detail:

Step 1: Go on your favorite PC (Windows or Mac, maybe Linux?)

Simple Enough. Pretty sure it will also work on Linux, but I haven't tried it.

Step 2: Plug 7Bot into Computer and Electrical Socket

Use a USB cable to plug 7Bot into your computer.

Also make sure 7Bot is plugged into an external power source.

To see if it has power, hit the far left of the three buttons on the back of the robot. It should go to the default ready position as seen below.

Step 3: (OPTIONAL) If 7Bot Is Acting Weird, Make Sure Drivers for the Arduino Due Are Installed

This step may or may not be needed, and I am currently working to get to the bottom of why this tutorial works for some 7Bots and not others. My 7Bots came with drivers for the Arduino Due pre-installed, though I have heard of 7Bots where it sounds like they are not. If you think your 7Bot has already been set up, you can proceed. If not, try one of the two following strategies to figure out if 7Bot is ready to go.

1: Download the GUI at http://www.7bot.cc/download and try using it to get your 7Bot moving.

2: Download Getting_Started_with_Bot_v1.0.pdf and make sure Arduino Due drivers are installed from its instructions.

Step 4: Download Processing3

Processing3 is a Java-based development environment that is pretty straightforward if you are familiar with any of the major languages.

Download it here and install it.

Step 5: Download 7Bot Example Code

Go to GitHub and clone their example code from the following repo.

If you are unfamiliar with GitHub or just want the zip file, here, take this.

Step 6: Open Example Code in Processing3

Go to the Arm7Bot_Com_test.pde example file you got earlier and open it.

It will ask you how to open the code, so specify the location of wherever you put the processing.exe file.

When it opens up in Processing3 it will look something like this.

Step 7: Make Minor Configuration Adjustments.

Early in the code you will find the following lines

// Open Serial Port: Your should change PORT_ID accoading to

// your own situation.

// Please refer to: https://www.processing.org/reference/libraries/serial/Serial.html

int PORT_ID = 3;

Change the PORT_ID to match the one you plugged 7Bot into. You can refer to the documentation shown, use trial and error, or find yours by adding the following line of code and running the program...

// List all the available serial ports:

printArray(Serial.list());

When I ran this, I saw the following...

[0] "/dev/cu.Bluetooth-Incoming-Port"

[1] "/dev/cu.Bluetooth-Modem"

[2] "/dev/cu.usbmodem1411"

[3] "/dev/tty.Bluetooth-Incoming-Port"

[4] "/dev/tty.Bluetooth-Modem"

[5] "/dev/tty.usbmodem1411"

So I set PORT_ID to the first USB port, 2.

int PORT_ID = 2; //PINDAR - CHANGED FROM int PORT_ID = 3;
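If you would rather not count entries by hand, the lookup can be automated. Here is a minimal sketch in plain Java, assuming the 7Bot shows up with "usbmodem" in its port name as in the listing above. The class PortPicker and the method firstMatchingPort are hypothetical names for illustration, not part of the 7Bot example code.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, not part of the 7Bot example code.
class PortPicker {
    // Returns the index of the first port whose name contains the hint
    // (case-insensitive), or -1 if nothing matches.
    static int firstMatchingPort(List<String> portNames, String hint) {
        String needle = hint.toLowerCase();
        for (int i = 0; i < portNames.size(); i++) {
            if (portNames.get(i).toLowerCase().contains(needle)) {
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        // Same port names as the listing above.
        List<String> ports = Arrays.asList(
            "/dev/cu.Bluetooth-Incoming-Port",
            "/dev/cu.Bluetooth-Modem",
            "/dev/cu.usbmodem1411",
            "/dev/tty.Bluetooth-Incoming-Port",
            "/dev/tty.Bluetooth-Modem",
            "/dev/tty.usbmodem1411");
        System.out.println("PORT_ID = " + firstMatchingPort(ports, "usbmodem"));
    }
}
```

Inside a Processing sketch you could feed it Arrays.asList(Serial.list()) instead of the hard-coded list. Port names look different on Windows and Linux, so the "usbmodem" hint would need to change there.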

Step 8: Run the Code in Processing

Hit the Play Button in the top left hand corner of Processing3, and a small window should open and the robot should start moving.

Step 9: Watch 7Bot come to life.

Or not. Well, it should start moving, but if it doesn't, the first thing to try is changing the port in Step 7. If that doesn't work, contact me and I will try to work with you to get it running. Then I will update this blog so that the next person doesn't have the same problem you had.

Step 10: Have fun with my Robot Art 2017 Code

The example code from 7Bot is well documented and shows you how to do all sorts of cool things like recording the robot's movements and then playing them back. I learned most of the code I used in the Robot Art 2017 contest by reverse engineering this example.

If you want to see my code, here is a version that has some of the extra functionality like easier-to-use inverse kinematics. But if you clone this repo, no judging my coding style. I like comments and leaving lots of them in as a history of what I was doing earlier in the process. Never know when I might need to reference them or revert. I know that is what version control is for, but I leave comments everywhere anyways. Hey. You promised you wouldn't judge!

Hope this tutorial works out for you. As mentioned earlier, write with any issues and I will try to clarify within 24 hours, maybe even immediately. 7Bot is awesome and I hope this tutorial helps you get it running.

Pindar

Elastic{ON} 17

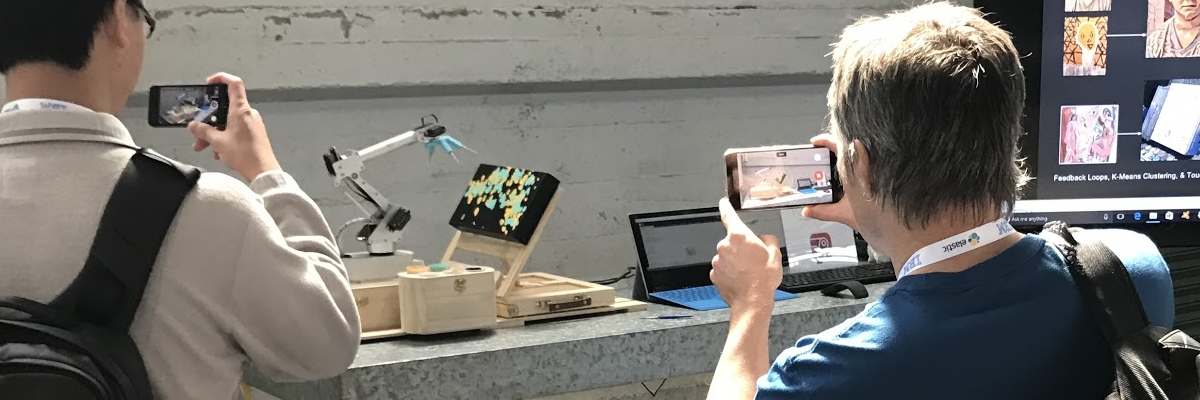

Just finished with a busy week at Elastic{ON} 17 where we had a great demo of our latest painting robot. One of the best things to come of these exhibitions is the interaction with the audience. We can get a better sense of what works as part of the exhibit as well as what doesn't.

Our whole exhibition had two parts. The first was a live interactive demo where one of our robots was tracking a live Elastic index of conference attendees' wireless connections and painting them in real time. The second was an exhibition of the cloudpainter project where Hunter and I are trying to teach robots to be creative.

A wall was set up at the conference where we hung 30 canvases. Every 20-30 minutes, a 7Bot robotic arm painted dots on a black canvas. The locations of the dots were taken from the geolocation of 37 wireless access points within the building.
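Turning an access point's location into a dot position comes down to rescaling coordinates. Here is a minimal sketch in plain Java of that idea. It is an illustration only: the class DotMapper, the floor-plan size, and the canvas size are all made-up assumptions, not the values used in the exhibit.

```java
// Hypothetical mapping; floor-plan and canvas dimensions are made-up values.
class DotMapper {
    // Linearly rescale value from the range [inMin, inMax] to [outMin, outMax].
    static double rescale(double value, double inMin, double inMax,
                          double outMin, double outMax) {
        return outMin + (value - inMin) * (outMax - outMin) / (inMax - inMin);
    }

    public static void main(String[] args) {
        // Assumed: an access point at (25 m, 10 m) on a 100 m x 40 m floor plan,
        // painted onto a 500 mm x 200 mm area of canvas.
        double x = rescale(25, 0, 100, 0, 500);
        double y = rescale(10, 0, 40, 0, 200);
        System.out.println(x + ", " + y); // prints "125.0, 50.0"
    }
}
```

This is the same idea as Processing's built-in map() function, so in a Processing sketch you would not need the helper at all.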

There are lots of ways to measure the success of an exhibit like this. The main reason we think that it got across to people, though, was the sheer number of pictures and posts to social media. There was a constant stream of interested attendees and questions.

Also, the exhibition's sponsors and conference organizers appeared to be pleased with the final results as well as all the attention the project was getting. At the end of two days, approximately 6,000 dots had been painted on the 30 canvases.

A personal highlight for me was the fact that Hunter was able to join me in San Francisco. We had lots of fun at the conference and were super excited to be brought on stage during the conference's closing Q&A with the Elastic Founders.

Will leave you with a pic of Hunter signing canvases for some of our Elastic colleagues.

Capturing Monet's Style with a Robot

As we gather data in an attempt to recreate the style and brushstroke of old masters with Deep Learning, we thought we would show you one of the ways we are collecting data. And it is pretty simple actually. We are hand painting brushstrokes with a 7Bot robotic arm and recording the kinematics behind the strokes. It is a simple trace and record operation where the robotic arm's position is recorded 30 times a second and saved to a file.
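For anyone who wants to try something similar, here is a minimal sketch in plain Java of the record side of a trace and record operation. It is an illustration only: the class StrokeRecorder, the CSV layout, and the sample angles are assumptions, not our actual recording code or file format.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical recorder; the CSV layout is an assumption for illustration.
class StrokeRecorder {
    static final int SAMPLES_PER_SECOND = 30; // sampling rate mentioned in the text
    private final List<double[]> frames = new ArrayList<>();

    // Store one snapshot of the arm's joint angles (in degrees).
    void addFrame(double[] jointAngles) {
        frames.add(jointAngles.clone());
    }

    // One CSV line per frame: time in seconds, then each joint angle.
    String toCsv() {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < frames.size(); i++) {
            double seconds = i / (double) SAMPLES_PER_SECOND;
            sb.append(String.format(Locale.ROOT, "%.3f", seconds));
            for (double angle : frames.get(i)) {
                sb.append(',').append(String.format(Locale.ROOT, "%.1f", angle));
            }
            sb.append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        StrokeRecorder recorder = new StrokeRecorder();
        // Two made-up poses for an arm with 7 actuators.
        recorder.addFrame(new double[] {90, 115, 65, 90, 90, 90, 80});
        recorder.addFrame(new double[] {91, 114, 66, 90, 90, 90, 80});
        System.out.print(recorder.toCsv());
    }
}
```

In a real setup addFrame would be called from a loop that reads the arm's current pose 30 times a second, and the resulting text would be written to disk once the stroke is finished.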

As can be seen in the picture above, all Hunter had to do was trace the brush strokes he saw in the painting. He did this for a number of colors and when he was done, we were able to play the strokes back to see how well the robot understood our instructions. As can be seen in the following video, the playback was a disaster. But that doesn't matter to us that much. We are not interested in the particular strokes as much as we are in analyzing them for use in the Deep Learning algorithm we are working on.

Woman With A Parasol is the fourth masterpiece we have begun collecting data for. As this is an open source project, we will be making all the data we collect public. For example, if you have a 7Bot, or similar robotic arm with 7 actuators, here are the files that we used to record the strokes and make the horrible reproduction.

Full Visibility's Machine Learning Sponsorship

Wanted to take a moment to publicly thank cloudpainter's most recent sponsor, Full Visibility.

Full Visibility is a Washington, D.C.-based software consulting boutique that I have been lucky enough to become closely associated with. Their sponsorship arose from a conversation I had with one of their partners. I was telling him how I thought that Machine Learning, which has long been an annoying buzzword, was finally showing evidence of being mature. Next thing I knew, Full Visibility bought a pair of mini-supercomputers for the partner and me to experiment with. One of the two boxes can be seen in the picture of my home-based lab below. It's the box with the cool white skull on it. While nothing too fancy, it has about 2,500 more cores than any other machine I have ever been fortunate enough to work with. The fact that private individuals such as myself can now run ML labs in their own homes might be the biggest indicator that a massive change is on the horizon.

Full Visibility joins the growing list of cloudpainter sponsors which now includes Google, 7Bot, RobotArt.org, 50+ Kickstarter Backers, and hundreds of painting patrons. I am always grateful for any help with this project that I can get from industry and individuals. All these fancy machines are expensive, and I couldn't do it without your help.

Pindar Van Arman

Some Final Thoughts on bitPaintr

Hi again,

It's been a year since this project was successfully launched. As such, here is a recap of how the project went, insight on what I have learned about my own art, as well as a preview of where I am taking things next. This might be a long post, so sit tight.

Some quick practical matters first though. For backers still awaiting your 14"x18" portraits, they should be in the image and time lapse below. If there has been a mix-up and your portrait somehow got overlooked, just send me a message and I will straighten it out. Also look for any other backer awards such as postcards and line art portraits in the coming weeks.

A Year of bitPaintr

I can start by saying that I did not imagine the bitPaintr project doing as well as it did. And I have no problem thanking all the original backers once again - even though you are all probably tired of hearing it. But as a direct result of your support so many good things happened for me over the past year. I could tell you about all of them but that would make this post too long and too boring - so I will just concentrate on the two most significant things that resulted from this campaign.

The first is that I finally found my audience. Slowly at first, then more rapidly once the NPR piece aired, people started hearing about and reacting to my art. And the more people would hear about it, the more media would cover it, and then even more people would hear about it. And while not completely viral, it did snowball and I found myself in dozens of news articles, features, and video pieces. Here is a list of some of my favorites. This time last year I was struggling to find an audience and would have settled for any venue to showcase my art. Today, I am able to pick and choose from multiple opportunities.

The second most significant part of all this is that I found my voice. Not sure I fully understood my own art before, well not as much as I do now. I had the opportunity to speak to, hang out with, and get feedback from you all, other artists, critics, and various members of the artificial intelligence community. All this interaction has led me to realize that the paintings my robots produce are just artifacts of my artistic process. I once focused on making these artifacts as beautiful as possible, and while still important to me, I have come to realize that the paintings are the most boring part of this whole thing.

The interaction, artificial creativity, processes, and time lapse videos are where all the action is. In the past year I have learned that my art is an interactive performance piece that explores creativity and asks the sometimes trite questions of "What is Art?" and "What makes me, Pindar, an Artist?" - or anyone an artist. This is usually a cliche theme, and as such a difficult topic to address without coming off as pretentious. But I think the way my robots address it is novel and interesting. Well, at least I hope so.

Next Steps

As I close up bitPaintr, I am looking forward to the next robot project called cloudPainter. Will begin by telling you the coolest part about the project, which is that I have a new partner, my son Hunter. He is helping me focus on new angles that I had not considered before. Furthermore, our weekend forays into Machine Learning, 3D printing, and experimental AI concepts have really rejuvenated my energy. Already his enthusiasm, input, and assistance have resulted in multiple hardware upgrades. While the machine in the following photo may look like your average run-of-the-mill painting robot, it has two major hardware upgrades that we have been working on.

The first can be seen in the bottom left-hand corner of the robot. It is the completely custom 3D printed NeuralJet Painthead. Hunter, Dante, and I have been designing and building this device for the last 4 months. It holds and operates five airbrushes and four paintbrushes for maximum painting carnage. The second major hardware improvement can be seen near the top of the canvas. You will notice not one, but two fully articulated 7Bot robotic arms. So while the NeuralJet will be used for the brute application of paint and expressive marks, the two 7Bot robotic arms will handle the more delicate details. Furthermore, each robotic arm will have a camera for looking out into its environment and tracking its own progress on the paintings.

Our software is currently receiving a similar overhaul. I would go into detail, but Hunter and I are still not sure of where it's going. We are taking and using all of the previous artificial creativity concepts that have gotten us this far, and adding to them. While bitPaintr was a remarkably independent artist, it did have multiple limitations. In this next iteration we are going to see how many of those limitations we can remove. We are not positive what exactly that will look like, but have given ourselves a year to figure it out.

If you would like to continue following our progress, check out our blog at cloudpainter.com. Things are just getting started on our sixth painting robot and we are pretty excited about it.

Thanks for everything,

Pindar Van Arman

Integrating 7Bot with cloudpainter

Could not be happier with the 7Bot that we are integrating into cloudpainter.

The start-up robotic arm manufacturers that make 7Bot sent us one for evaluation and we have been messing around with it for the past week. The robot turned out to be perfect for our application, and also it was just plain fun. We have experimented with multiple different configurations inside of cloudpainter and think the final one will look something like the Photoshop mockup above.

At this point here is how Hunter and I are thinking it will create paintings.

Our Neural Jet will be on an XY Table and airbrush a quick background. The 7Bots, each equipped with a camera and an artist's brush, will then take care of painting in details. The 7Bots will use AI, Feedback Loops, and Machine Learning to execute and evaluate each and every brush stroke. They will also be able to look out into the world and paint things they find interesting, particularly portraits.

The most amazing thing about all this is that until recently, doing all of this would have been prohibitively expensive. Something similar to this setup when I started 10 years ago would have been $40,000-50,000, maybe even more. Now you can buy and construct just about all the components that cloudpainter would need for under $5,000. If you wanted to go with a scaled down version, you could probably build most of its functionality for under $1,000. The most expensive tool required is actually the 3D printer that we bought to print the components for the Neural Jet seen in the bottom left-hand corner of the picture. Even the 7Bots cost less than the printer.

Will leave you with this video of us messing around with the 7Bot. It's a super fun machine.

Also if you are wondering just what this robot is capable of, check out their video. We are really excited to be integrating this into cloudpainter.

It's an amazing machine.